Andy Kerber (@dbakerber), Senior Consultant

In this blog I will examine the effects ‘setall’ and ‘none’ on the Oracle initialization parameter filesystemio_options. This setting is used to set I/O parameters for the Oracle database when the data files are stored on file system rather than ASM.

Test Environment

The Oracle database version is 12.1.0.2, and the OS version is RHEL 7.6. The VMware Workstation and Oracle VirtualBox versions are the most recent available – VirtualBox version 5.2.12r122591 and VMware Workstation Pro version 12.5.7 build-5813279. The NMON analyzer version is 5.21 and SLOB version is 2.4.0.

Explanation of filesystemio_options

I/O operations in UNIX and Linux systems typically go through the file system cache. Although this doesn’t represent a problem in itself, this extra processing does require resources. Bypassing the file system cache reduces CPU requirements, and frees up the file system cache for other non-database related file operations. Operations against raw devices automatically bypass the file system cache.

When a synchronous I/O request is submitted to the operating system, the writing process blocks until the write is complete before continuing to process. With asynchronous I/O, processing continues while the I/O request is submitted and processed. This allows asynchronous I/O to bypass some of the performance bottlenecks associated with I/O operations.

Oracle can take advantage of direct I/O and asynchronous I/O on supported platforms using the FILESYSTEMIO_OPTIONS parameter, the possible values for which are listed below.

- ASYNCH – enables asynchronous I/O where possible

- DIRECTIO – enables direct I/O where possible

- SETALL – enables both direct I/O and asynchronous I/O where possible

- NONE – disables both direct I/O and asynchronous I/O

Configuration

Both VMs were created as new VMs, and each VMDK was pre-allocated to full size. The VM (on both VirtualBox and VMware Workstation) were created with 16G RAM and 1 CPU. Swap size was set to 16G also. A second VMDK was added for the Oracle datafiles, size 80G (once again, pre-allocated). This was mounted on /u02. In each, an Oracle database was created SID iotest, and disk_asynch_io=TRUE.

The SLOB tablespace was created with the tablespace name slob, and pre-allocated to 32G in size in order to eliminate waits on file extension. We created 64 slob schemas, and tested with 32 schemas using this command: ./runit.sh 32

Testing Process

In the testing process, filesystemio_options was set to setall in the spfile, the system rebooted and the database started. Each time the filesystemio_options setting was changed, the system was rebooted in order to make sure there was no caching interfering with the process. Each test ran for one hour. It was expected that the filesystemio_options=setall would produce measurably, though not substantially better, I/O performance than filesystemio_options=none.

Results

However, the results of the test were surprising, and consistent on both VirtualBox and VMware Workstation.

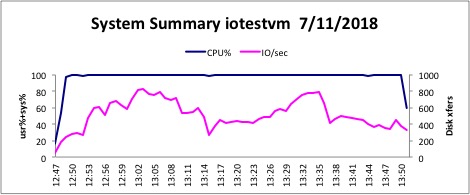

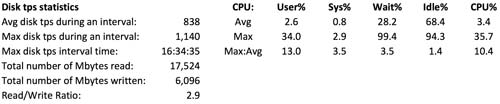

Below is a typical graph from NMON analyzer with filesystemIO_options=SETALL:

In the AWR reports we see this for the top foreground wait events:

Top 10 Foreground Events by Total Wait Time ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Total Wait Wait % DB Wait Event Waits Time (sec) Avg(ms) time Class ------------------------------ ------- ---------- -------- ----- -------- db file sequential read 55,702 11.1K 199.49 74.1 User I/O db file parallel read 7,101 3890.4 547.86 25.9 User I/O DB CPU 17.9 .1 cursor: pin S wait on X 53 2.9 55.09 .0 Concurre library cache load lock 93 2.3 24.25 .0 Concurre db file scattered read 47 2 41.62 .0 User I/O read by other session 107 1.6 15.19 .0 User I/O log file sync 27 1.5 55.75 .0 Commit Disk file operations I/O 63 1.3 21.14 .0 User I/O control file sequential read 123 1.1 9.20 .0 System I Wait Classes by Total Wait Time ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Avg Avg Total Wait Wait % DB Active Wait Class Waits Time (sec) (ms) time Sessions -------------- ---------- ------------ ------- ------ -------- User I/O 71,514 15,558 217.55 103.7 8.3 System I/O 14,235 1,071 75.23 7.1 0.6 DB CPU .1 0.0 Concurrency 203 6 29.23 .0 0.0 Commit 38 2 49.41 .0 0.0 Other 526 2 3.04 .0 0.0 Network 80 0 0.00 .0 0.0

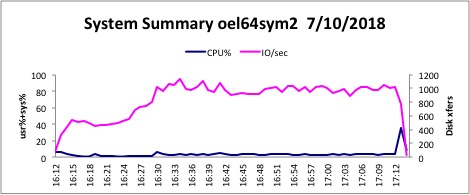

Below is typical output when filesystemIO_options=NONE.

Top 10 Foreground Events by Total Wait Time

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Total

Wait

Event Waits Time Wait % DB Wait

(sec) Avg(ms) time Class

------------------------------ ---------- ------- -------- ----- --------

free buffer waits 2,528,630 26.2K 10.35 90.0 Configur

db file parallel read 226,393 1569 6.93 5.4 User I/O

db file sequential read 1,603,321 320.2 0.20 1.1 User I/O

buffer exterminate 19,919 200.4 10.06 .7 Other

DB CPU 138 .5

write complete waits 47 136.9 2912.46 .5 Configur

latch: cache buffers chains 16,208 135 8.33 .5 Concurre

latch: cache buffers lru chain 26,913 116.8 4.34 .4 Other

db file scattered read 1,513 49 32.39 .2 User I/O

log buffer space 99 23.5 237.62 .1 Configur

Wait Classes by Total Wait Time

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Avg Avg

Total Wait Wait % DB Active

Wait Class Waits Time (sec) (ms) time Sessions

-------------- ------------ ---------------- ---------- ------ --------

Configuration 2,792,212 29,067 10.41 100.0 8.1

System I/O 23,147 3,611 155.99 12.4 1.0

User I/O 1,867,661 1,953 1.05 6.7 0.5

Other 53,904 396 7.34 1.4 0.1

Concurrency 18,577 229 12.32 .8 0.1

DB CPU 138 .5 0.0

Commit 50 1 13.11 .0 0.0

Network 72 0 0.00 .0 0.0

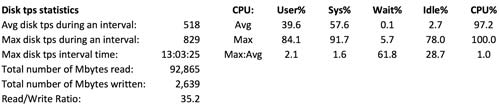

As you can see, it appears that filesystemIO_options=SETALL is being choked by the CPU resource, while filesystemIO_options=NONE has plenty of CPU available. Most likely the choke point is simply the I/O bandwidth.

At one of our recent clients, setting the filesystemio_options=setall in an Oracle database running in vSphere virtualization on enterprise level storage caused a roughly 30 percent improvement in the I/O performance of the database.

Conclusions

What are the implications of this? Most obviously, the filesystemIO_options setting on a VM probably needs to be evaluated on an individual basis when using filesystem. Additionally, it may imply that the ASM is the better choice in a virtualized environment. While the results of this experiment have been interesting, I plan to follow up with more research in more powerful environments in the future in order to determine how much the underlying hardware affects the recommendations for this setting.