Shawn Meyers (@1dizzygoose), Principal Architect

Recently while at a client site, I ran into a situation that stumped me for a bit and shows why best practices exist. This experience reminded me that max server memory is important, and forgetting to set it can cause some unexpected issues.

While looking at a different issue at a client site, I noticed an Availability Group secondary node using 25% of the CPU on a server. The primary node was only using 2%, and the other secondary was only using 2% CPU, and the virtual machines were all running on same type of hardware. Why the large gap? Below is my troubleshooting process.

First, I assumed there was an additional database being overly used on this instance, but that wasn’t part of the Availability Group. I checked this out, but it wasn’t the case.

Next I thought it was something to do with the virtual layer. But that investigation also led nowhere.

SQL Server logs were also all clean, no warnings or errors.

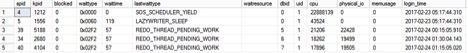

Digging deeper into what was using all the CPU, I saw that SPID 4, the resource monitor was using all of the CPU. With either a SOS_SCHEDULER_YIELD wait type or PREEMPTIVE_XE_CALLBACKEXECUTE wait type. Many of the scripts I use I factor out system SPIDs, so it took me a bit longer to get there than it should have.

PREEMPTIVE_XE_CALLBACKEXECUTE is a new wait type for me. So I used Paul Randal’s SQLskills.com to look up the wait type and learn more about it. However, it wasn’t much help, and Paul stated that he hasn’t seen it be a noticeable contention point.

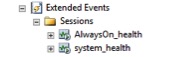

Oh, but it is an XE wait type. So let’s look at what extended events someone built that could be causing issues. However, I only see the defaults.

But just to be safe, I stopped both. No change.

So off to Google I go, and I found the following link:

This TechNet post describes the problem exactly. It reports the problem resolved, but doesn’t report a solution. I have added a solution, so others will find it and know the answer.

Then I found this Microsoft support article. It talks about the issue of system processes using too much memory as all the system processes execute trimming methods. Free memory looked good when I checked earlier, but this article got me thinking about what has changed at my client’s site.

Now this is a WSFC with four nodes, and three instances installed on each node with one AG per instance. Stacking instances is done to save on licensing and vendor applications, but using the same database names prevented from us from using one instance with multiple AGs. We can only have one configDB per instance, and we have two applications that have a configDB name hard coded (bad vendor, no cookies for you, not naming names).

So the system has been running this way for three years. Then last month the client added a third instance and third AG, to create a new environment that allows for a side-by-side upgrade of the application. Now they have the old version running with most staff, and the new version running with some staff. The third instance has been running for over a month.

Last weekend, the client moved nearly everyone to the new version, which is when the problem started. When they added the new instance, max server memory wasn’t set. So when the new database wanted more memory, it just grabbed what it wanted. This caused low memory issues on the server and caused the instances to fight each other. The CPU was being hit so hard by trying to trim working sets over and over again. Normally when I see max server memory not set the symptoms are very different – usually showing memory pressure and out of memory symptoms, or seeing buffer pressure messages in the SQL Server error logs.

Since there are multiple instances, and two of them were AG secondaries only, they gave up nearly all of their memory to the one instance that used all the memory. They were doing a bunch of trim exercises in the background, and using up all of the CPU. However, setting max server memory solved the problem. This highlights another reason not to stack instances if you can avoid it, or if you must, to always set max server memory when installing a new instance.

The moral of the story is to ensure you are following best practices by performing regular health checks of your servers, so you can catch mistakes in configuration before they impact performance.