Joe Grant, Principal Architect

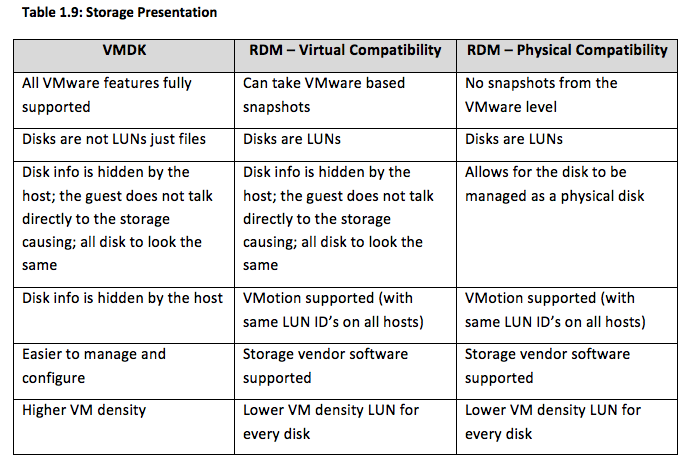

There are several different ways to present storage to a VM, each with its own pros and cons. Simply choosing the presentation method that supplies the fastest storage is not always the best choice. You will want to weigh performance against manageability and other features, such as NetApp’s Snapshot technology. In general, House of Brick recommends using VMDKs whenever possible; however, additional tooling at the SAN and/or network layers may influence the final decision on how storage is presented to a specific VM. More than one storage presentation option can be used for any given VM; for example, a VM’s OS disk can be a VMDK while its data volumes are RDMs.

Storage Types

The following are the most common storage options available.

VMDK

A Virtual Machine Disk (VMDK) is a flat file that resides in a datastore accessible to the vSphere servers. Through vSphere, the VMDK is presented to the operating system as a physical disk. Using VMDKs places the fewest restrictions on vSphere tooling, and new vSphere features typically support VMDKs first. In addition, VMDKs are the most common storage presentation, so the VMware support team will be most familiar with them.

NFS, dNFS, iSCSI

It is possible to present IP-based storage directly to the guest operating system. This is commonly referred to as “in-guest storage”. Assuming networking is correctly configured at the vSphere layer, there are few, if any, restrictions on the VM. This type of storage does require a reliable IP-based network capable of high throughput and low latency. Typically, 10 GbE networks with multiple network interfaces aggregated for increased throughput are used for IP-based storage. At the vSphere layer, make sure that the storage network and the other IP networks use separate NICs and vSwitches. A common use case for IP-based storage is using dNFS for Oracle database datafiles, while the OS and binary disks remain VMDK-based.
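The separation advice above (storage traffic on its own NICs and vSwitches) can be checked mechanically once you have an inventory of port groups and uplinks. The sketch below assumes a simplified, hypothetical data model (`PortGroup`, a vSwitch-to-NIC mapping, and made-up names such as `vSwitch1` and `vmnic2`); it does not call any vSphere API, and gathering the inventory is left to whatever tooling you already use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    """Hypothetical, simplified view of a vSphere port group."""
    name: str
    vswitch: str
    role: str  # "storage" or "vm"; any other value raises KeyError below


def shared_resources(port_groups, vswitch_uplinks):
    """Return (vSwitches, NICs) used by both storage and VM port groups.

    vswitch_uplinks maps each vSwitch name to the set of physical NICs
    attached to it. Two empty sets mean the networks are fully separated.
    """
    switches = {"storage": set(), "vm": set()}
    for pg in port_groups:
        switches[pg.role].add(pg.vswitch)
    # Collect every physical NIC reachable from each role's vSwitches.
    nics = {
        role: set().union(*(vswitch_uplinks.get(s, set()) for s in names))
        for role, names in switches.items()
    }
    return switches["storage"] & switches["vm"], nics["storage"] & nics["vm"]


pgs = [
    PortGroup("NFS-Storage", "vSwitch1", "storage"),
    PortGroup("VM-Network", "vSwitch0", "vm"),
]
uplinks = {"vSwitch0": {"vmnic0", "vmnic1"}, "vSwitch1": {"vmnic2", "vmnic3"}}
print(shared_resources(pgs, uplinks))  # (set(), set())
```

Returning the overlapping resources, rather than a bare pass/fail, makes it obvious which vSwitch or NIC needs to be split out.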

RDM

Raw Device Mapping (RDM) is a method of presenting storage from a SAN directly to the guest operating system. RDMs have two compatibility modes, virtual and physical. Once the storage is presented, the guest operating system uses it as if it were a physical disk. Typical use cases for RDMs are Microsoft Windows Clustering (the original reason RDMs were developed) and enabling SAN tooling.

There are some restrictions when using RDMs. The following is quoted from the vSphere Storage 5.5 Update 2 Release Notes:

RDM Considerations and Limitations

Certain considerations and limitations exist when you use RDMs.

- The RDM is not available for direct-attached block devices or certain RAID devices. The RDM uses a SCSI serial number to identify the mapped device. Because block devices and some direct-attach RAID devices do not export serial numbers, they cannot be used with RDMs.

- If you are using the RDM in physical compatibility mode, you cannot use a snapshot with the disk. Physical compatibility mode allows the virtual machine to manage its own, storage-based, snapshot or mirroring operations.

- Virtual machine snapshots are available for RDMs with virtual compatibility mode.

- You cannot map to a disk partition. RDMs require the mapped device to be a whole LUN.

- If you use vMotion to migrate virtual machines with RDMs, make sure to maintain consistent LUN IDs for RDMs across all participating ESXi hosts.

- Flash Read Cache does not support RDMs in physical compatibility. Virtual compatibility RDMs are supported with Flash Read Cache.

Note: In the second point, “snapshot” refers to a VM (vSphere) snapshot, not a storage-array snapshot.

There are additional restrictions when using RDMs, and they vary by vSphere version. Please refer to VMware Knowledge Base article 1005241 for further information.
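As a planning aid, the vSphere 5.5 limitations quoted above can be encoded as a pre-flight check for a proposed RDM. This is a minimal sketch using a hypothetical `RdmDisk` description that you would fill in by hand; it covers only the quoted points (serial number, whole LUN, physical-mode snapshot and Flash Read Cache, consistent LUN IDs for vMotion), not the version-specific restrictions in the KB article.

```python
from dataclasses import dataclass, field


@dataclass
class RdmDisk:
    """Hypothetical description of one planned RDM (illustration only)."""
    device: str                          # any label for the mapped LUN
    mode: str                            # "physical" or "virtual"
    whole_lun: bool = True               # False if mapping a partition
    exports_serial: bool = True          # device reports a SCSI serial number
    wants_vm_snapshot: bool = False
    wants_flash_read_cache: bool = False
    lun_id_by_host: dict = field(default_factory=dict)  # ESXi host -> LUN ID


def rdm_problems(disk: RdmDisk) -> list:
    """List conflicts with the vSphere 5.5 RDM limitations quoted above."""
    problems = []
    if not disk.exports_serial:
        problems.append("device does not export a SCSI serial number")
    if not disk.whole_lun:
        problems.append("RDMs must map a whole LUN, not a partition")
    if disk.mode == "physical" and disk.wants_vm_snapshot:
        problems.append("VM snapshots are unavailable in physical mode")
    if disk.mode == "physical" and disk.wants_flash_read_cache:
        problems.append("Flash Read Cache does not support physical mode")
    # For vMotion, every participating host must see the same LUN ID.
    if len(set(disk.lun_id_by_host.values())) > 1:
        problems.append("LUN ID is not consistent across ESXi hosts")
    return problems


disk = RdmDisk("oradata-01", "physical", wants_vm_snapshot=True,
               lun_id_by_host={"esx01": 12, "esx02": 14})
for problem in rdm_problems(disk):
    print(problem)
```

For this example, the check reports the physical-mode snapshot conflict and the mismatched LUN IDs; the same disk in virtual compatibility mode with matching LUN IDs would pass.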

Shared Storage

There are many instances where you will need shared storage so that more than one VM can write to the same disk. Two examples are Oracle Real Application Clusters (RAC) and Microsoft Windows Clusters. If you need either of these configurations, there are additional considerations.

For Oracle RAC, all of the storage types mentioned above will work. When sharing VMDKs, you can set what is called the multi-writer flag, which allows more than one VM to use the VMDK at the same time. The IP-based options take vSphere out of the storage path, so they function as if the guest OS were physical. NFS and dNFS are supported for Oracle RAC databases, while iSCSI and FCoE are less common in Oracle RAC configurations. Finally, any RDM can also be a shared disk, just as it would be in a physical environment.
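The multi-writer flag mentioned above is set per virtual disk in each VM’s configuration. The sketch below simply generates those `.vmx` lines for a list of SCSI controller/unit pairs; the `scsiX:Y.sharing = "multi-writer"` key is the one VMware documents for this flag, but the controller and unit numbers here are hypothetical. Verify the setting and its prerequisites for your vSphere version before relying on it (VMware’s guidance for 5.x/6.x, for example, required shared multi-writer disks to be eager-zeroed thick).

```python
def multiwriter_entries(disks):
    """Build .vmx lines that set the multi-writer flag on shared VMDKs.

    disks: iterable of (controller, unit) pairs, e.g. (1, 0) for scsi1:0.
    The same lines would go into the .vmx of every VM sharing the disks.
    """
    lines = []
    for controller, unit in disks:
        # SCSI unit 7 is reserved for the virtual SCSI controller itself.
        if unit == 7:
            raise ValueError(f"scsi{controller}:7 is reserved for the controller")
        lines.append(f'scsi{controller}:{unit}.sharing = "multi-writer"')
    return lines


for line in multiwriter_entries([(1, 0), (1, 1)]):
    print(line)
# scsi1:0.sharing = "multi-writer"
# scsi1:1.sharing = "multi-writer"
```

Keeping the shared disks on their own controller (here `scsi1`) makes it easier to apply identical entries on every RAC node.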

For Microsoft Windows Clusters, the primary method for shared storage is RDMs. Initially, RDMs were the only choice; however, support for other storage presentation methods has been added recently. You will likely want to do additional research on the available methods before making a decision because, as mentioned previously, support is still growing and depends on both the OS version and the vSphere version.

Decision Points

So in the end, as with most things in IT, the answer to the question “what presentation method do I choose?” is… it depends.

- What hardware do you already have?

- What are the performance needs of the application and/or database?

- What are the capacity needs of the application and/or database?

- Are you attempting to use any available tooling from the SAN vendor?

- Support staff: what are the administrators already familiar with and willing to support?

- Are there operating system, software, or database configuration concerns or requirements?
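A few of the questions above map onto rules stated earlier in this post: default to VMDKs, use dNFS for Oracle datafiles when a suitable IP storage network exists, and use RDMs for SAN tooling or Windows clustering. The heuristic below is a hypothetical sketch of only those rules (`Requirements` and `suggest_presentation` are illustrative names); performance, capacity, existing hardware, and staff skills still need human judgment.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical yes/no answers to a few of the questions above."""
    windows_cluster: bool = False        # shared disks for Windows clustering
    san_tooling: bool = False            # array snapshots, mirroring, etc.
    oracle_dnfs_datafiles: bool = False  # Oracle datafiles on dNFS over IP


def suggest_presentation(req: Requirements) -> dict:
    """Heuristic sketch: VMDK by default, per this post's recommendation."""
    choice = {"os": "VMDK", "binaries": "VMDK", "data": "VMDK"}
    if req.oracle_dnfs_datafiles:
        # dNFS for datafiles; OS and binary disks stay on VMDKs.
        choice["data"] = "in-guest dNFS"
    elif req.san_tooling:
        # Physical mode lets the VM manage storage-based snapshots/mirroring.
        choice["data"] = "RDM (physical compatibility)"
    elif req.windows_cluster:
        choice["data"] = "RDM"
    return choice


print(suggest_presentation(Requirements(san_tooling=True)))
# {'os': 'VMDK', 'binaries': 'VMDK', 'data': 'RDM (physical compatibility)'}
```

Only the data disks change in this sketch, reflecting the point that more than one presentation method can be mixed within a single VM.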

Conclusion

In this post, we described several options for presenting storage to a VM and discussed the pros and cons of each, to help you decide which options are best suited to your environment.

References

RDM Considerations and Limitations – vSphere Storage 5.5 Update 2 Release Notes