Jim Hannan (@HoBHannan), Principal Architect

In Part 3 of vSphere 5 Advantages for VBCA, I turn my focus to Storage vMotion and its enhancements in vSphere 5. Storage vMotion capabilities were first introduced in vSphere 3 as a way to migrate from VMFS2 to VMFS3. The feature was re-introduced in vSphere 3.5 (after high demand from the VMware user base) as a supported way for administrators to move virtual machines from one datastore to another. In vSphere 5, Storage vMotion has received multiple enhancements that shorten migration times.

Storage vMotion can be separated into two components: data movers and data mirroring. Data movers read blocks from the original location and copy them to the new destination. Data mirroring writes data to both the original VMDK location and the new location. The guest does not receive a write acknowledgment until the data has been written in both locations.
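To make the mirroring rule concrete, here is a minimal toy sketch in Python. It is not VMware's implementation; the `MirroredDisk` class and its fields are hypothetical names used only to illustrate that a guest write is acknowledged after both copies hold the data.

```python
class MirroredDisk:
    """Toy model of a virtual disk whose guest writes are mirrored.

    Hypothetical illustration only; block storage is modeled as two lists.
    """

    def __init__(self, num_blocks):
        self.source = [None] * num_blocks        # original VMDK location
        self.destination = [None] * num_blocks   # new VMDK location

    def write(self, block, data):
        # Issue the guest write to both locations.
        self.source[block] = data
        self.destination[block] = data
        # Acknowledge only when both copies hold the same data.
        return self.source[block] == data and self.destination[block] == data


disk = MirroredDisk(num_blocks=4)
acked = disk.write(2, b"redo-log-entry")
```

In this model `acked` is true only after both lists contain the block, which mirrors the behavior described above: the guest never sees a completed write that exists in just one location.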

Data Movers

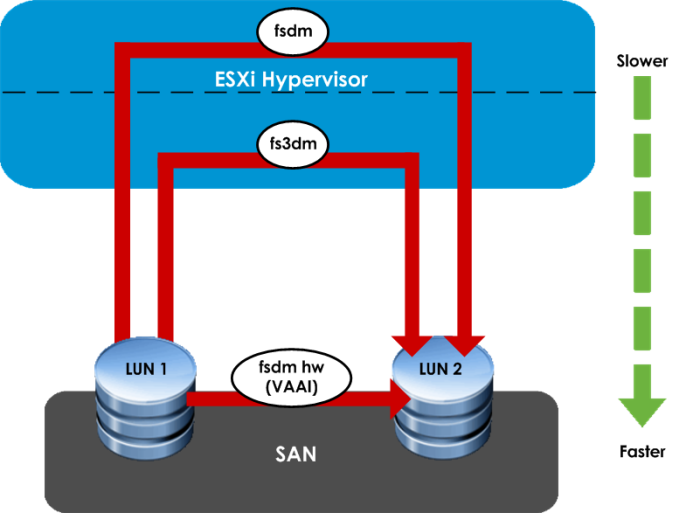

The ESX hypervisor chooses one of three data mover mechanisms, and that choice greatly affects the speed of the Storage vMotion. The available data movers are:

fsdm

fs3dm

fs3dm-hw

fsdm is the slowest of the data movers. It appears to function more like an OS copy mechanism, copying files from one datastore to the next.

fs3dm is a faster mechanism. In his book, “VMware vSphere 5 Clustering Technical Deepdive”, Duncan Epping states that fs3dm moves data through fewer layers than traditional fsdm, making it faster and more efficient.

fs3dm-hw is a VAAI hardware offload that hands the copy off to the SAN or NAS layer. I recently had the opportunity to use fs3dm-hw and it was impressively fast: 30-50% faster than fs3dm.

The hypervisor chooses which data mover to use based on a specific set of conditions. If the source and destination datastores are on the SAN, use the same VMFS block size, and the SAN/NAS is VAAI capable, then it uses fs3dm-hw.

If all the above conditions are met but the SAN is not VAAI capable, then it resorts to fs3dm. If the block sizes are different, then the hypervisor uses the oldest (and slowest) fsdm mechanism.
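The selection rules above can be sketched as a small decision function. This is a simplified illustration of the conditions described in this post, not the hypervisor's actual logic; the function name and its two boolean parameters are assumptions made for the example.

```python
def choose_data_mover(same_block_size, vaai_offload_available):
    """Pick a data mover following the rules described in this post.

    same_block_size: source and destination datastores share a VMFS block size.
    vaai_offload_available: both datastores sit on storage that is VAAI capable.
    Both parameters are hypothetical simplifications.
    """
    if not same_block_size:
        # Different block sizes force the oldest, slowest mechanism.
        return "fsdm"
    if vaai_offload_available:
        # Matching block sizes plus VAAI: offload the copy to the array.
        return "fs3dm-hw"
    # Matching block sizes without VAAI: software data mover.
    return "fs3dm"


for block_match, vaai in [(True, True), (True, False), (False, True)]:
    print(block_match, vaai, choose_data_mover(block_match, vaai))
```

Note that a block-size mismatch rules out both faster movers in this sketch, even when the array supports VAAI, which is why the block size recommendation below matters.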

House of Brick Best Practice

An in-place upgrade from VMFS3 to VMFS5 retains the datastore's original block size. In VMFS5, VMware has standardized on a 1 MB block. HoB strongly recommends that customers move to the standard block size, because mismatched block sizes force Storage vMotion onto the slower fsdm data mover described above.

What Does This Mean For Your VBCA?

If you ask VMware Support about snapshots with a database, you will typically get a response like “It’s not a good idea”. We do not agree with that generic statement. We do, however, encourage our customers to be aware of the risks involved in creating a snapshot on a VBCA. In particular, these databases typically have high I/O. If the I/O is high enough while a snapshot exists, you can suffer from an overrun scenario in which data changes overrun the snapshot deletion.

How does this happen? When snapshots become very large they can be difficult to delete. During a deletion the data may be changing faster than the snapshot can be committed. This causes an overrun. I have seen cases where the snapshot deletion was barely keeping up with data changes and the snapshot could not be deleted until the data change rate slowed. In this particular case, the snapshot deletion occurred 8 hours after it was issued.
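A rough way to reason about overrun is to compare the rate at which the snapshot is committed with the rate at which new changes arrive. The sketch below uses a simple linear model with made-up numbers; the function name, the units, and the rates are all assumptions for illustration and are not measurements from the case described above.

```python
def hours_to_commit(snapshot_gb, commit_gb_per_hour, change_gb_per_hour):
    """Estimate how long a snapshot deletion takes under a linear model.

    Returns None when changes arrive at least as fast as they are committed,
    which is the overrun scenario.
    """
    net_gb_per_hour = commit_gb_per_hour - change_gb_per_hour
    if net_gb_per_hour <= 0:
        return None  # deletion never catches up at these rates
    return snapshot_gb / net_gb_per_hour


# Hypothetical numbers: an 80 GB snapshot committed at 50 GB/hour.
barely_keeping_up = hours_to_commit(80, 50, 40)
overrun = hours_to_commit(80, 50, 50)
quiet_period = hours_to_commit(80, 50, 10)
```

With these illustrative inputs, a change rate close to the commit rate stretches the deletion to many hours, a matching rate means it never finishes, and a quiet period lets it complete quickly.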

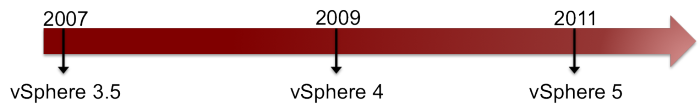

I bring this up to underscore the enhancements that VMware has made to Storage vMotion. In earlier versions (3.5), Storage vMotion relied on snapshots, which may not have been practical for every database or Business Critical Application. In vSphere 4 it relied on changed block tracking, which was more practical but still not optimal. In vSphere 5, data mirroring makes it truly feasible to use Storage vMotion for VBCAs.

Storage vMotion Dirty Block Copy Mechanism Timeline

vSphere 3.5 used snapshots

vSphere 4 used changed block tracking

vSphere 5 uses mirroring

Additional Benefits of Storage vMotion – Renaming a Virtual Machine and its VMDKs

For administrators, it can be frustrating to rename a virtual machine but have its VMDKs keep the original name. With Storage vMotion you can rename the virtual machine and its VMDKs in one operation and without downtime.

Reference: http://kb.vmware.com/selfservice/microsites/search.do?cmd=displayKC&externalId=1029513