House of Brick Principal Architect

Welcome to part three in our series of posts regarding the virtualization of your business critical SQL Servers. This post continues our discussion with details around how to architect the VMware infrastructure and the new virtual machine so that it meets or exceeds the physical server specifications. These best practices are a direct result of years of virtualizing SQL Server, and include all of our lessons learned on infrastructure and configuration.

Scale Up or Scale Out?

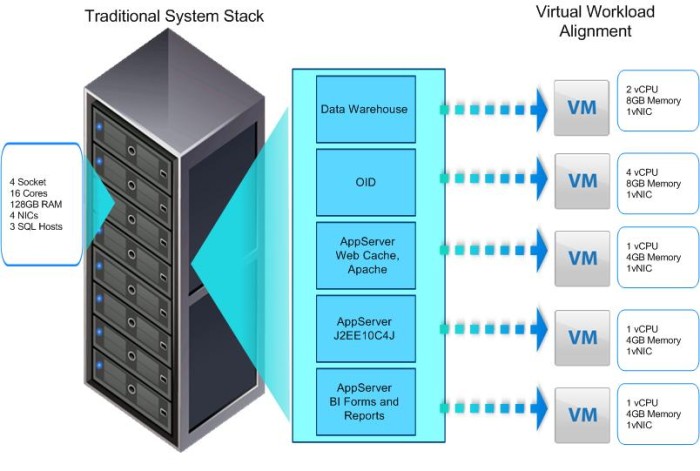

The first architectural challenge I always encounter is the discussion around scaling up or scaling out. Scaling up means more resources assigned to a single virtual machine, along with either more than one SQL Server instance or many distinct applications all connecting to each instance. In contrast, scaling out your SQL Server virtual machines means more independent virtual machines with smaller workloads.

I instinctively choose to scale out whenever possible.

If you have a huge workload already that is centralized around one application, a single workload is your only option. You could try sharding, or try using SQL Server 2012 AlwaysOn and a smart data-access layer to split your reads from your writes, but this is not usually feasible. Most organizations use virtualization to consolidate workloads onto a smaller physical footprint, but if you have a lot of huge virtual machines, you run a greater risk of encountering limitations that can induce performance problems and reduce some of the flexibility inherent with virtual machines. Such risks include:

CPU scheduling penalties from idle resource scheduling or competing thread requests.

I/O and network path bottlenecks.

Additional overhead on the ESXi hosts with resources dedicated to resource management (CPU, memory overhead).

Increased difficulty scheduling routine maintenance if a lot of applications are dependent on one database server.

On the flip side, scaling up your virtualized workloads has its advantages in certain situations. For example, certain licensing scenarios might limit the number of virtual machines you are allowed to operate. You have fewer servers to patch and manage. That means less of a security footprint, fewer IPs and broadcast traffic on the network, etc.

However, in the vast majority of the scenarios that I encounter, scaling out makes more sense. I try to stick with the atomic model whenever is appropriate.

The atomic model implies that you are treating your workloads more as single-purpose appliances than consolidated general-purpose servers. If your licensing allows, divide your workload into small units. Doing so will increase flexibility and workload balancing by keeping the workload units small.

Physical Server Architecture

First, we’ll focus on your physical hardware. I always go through the hardware with the following checklist.

At the physical server level (we’ll call it the VM host), make sure you disable any “green” settings inside the BIOS. You’re already saving quite a bit of power through the consolidation you get through virtualization. You do not want your consolidated server host running artificially slower than top performance when the CPUs are ramped up.

Make sure the CPUs are set to high performance mode. Enable virtualization extensions in your CPUs (i.e. Intel VT-x). You get the full CPU feature set exposed to VMware, which boosts performance.

Disable some form of auto-reboot on hardware issue in the BIOS as well. HP has a setting called ‘Disable Automatic Server Recovery’. This feature will physically reboot a server in the event of a hardware problem. All this does for you is re-introduce a possibly faulty server back into your server farm so that it can fail again and cause more disruptions.

Enable Hyper-Threading in your Intel-based CPUs. After Intel’s first attempt at Hyper-Threading I never thought I could say this; Intel’s first attempt was poor, and very few applications benefited from it. Today’s implementation of Hyper-Threading works much better, and I endorse enabling it.

Virtual Machine Construction

Do everything you can to ensure all of your VMs are 64-bit. VMware stopped adding performance enhancements for 32-bit servers years ago, and the latest flavors of Windows Server are 64-bit only. 32-bit servers introduce architectural difficulties with SQL Server and memory management that I want the world to put behind them.

When architecting your virtual machine, you should now have a good idea of the amount of CPU resources that you require to run your workload. Please do not assume that you will require an eight vCPU virtual machine if your workload runs on an eight-core physical server. If your virtual machine’s workload does not require (and utilize) all eight vCPUs to properly run, the overhead of the additional idle resource scheduling will actually slow your virtual machine down.

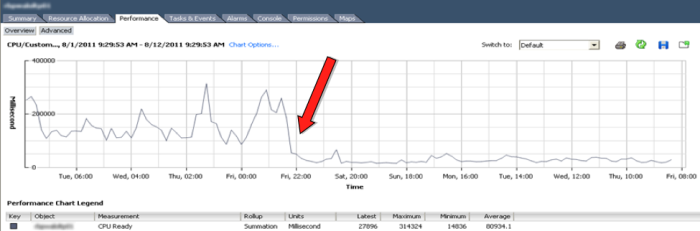

Sometimes this overhead is enough to notice with application performance. This overhead is measured in a statistic called CPU Ready Time. CPU Ready Time is a measurement of how long a vCPU has to wait in a ready-to-run state before it is scheduled for physical CPU time.

Our requirements for your business critical SQL Servers is that your CPU Ready Time never exceeds 500ms, and that the average is below 300ms. As more large VMs exist on a single server, you will experience more CPU contention, even if your CPU statistics on the host look good at a glance.

The chart above shows the impact on CPU Ready Time by simply balancing a virtual machine workload. CPU Ready Time went from abysmally terrible to just bad after we balanced the workload without making any other changes. Further work went into getting these numbers to under 250ms across the cluster.

Be conservative when you allocate vCPUs to your workloads. You can always add more if the workload requires it.

Memory

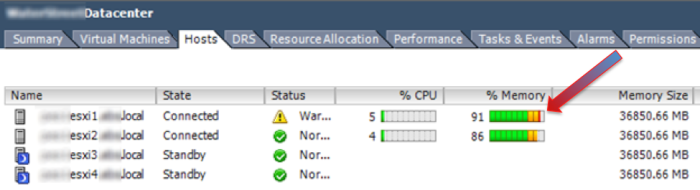

Never overcommit your business-critical workload memory on a single host. Period.

Customers rarely run out of CPU resources on a server before they run out of memory resources, and therefore they seemingly always try to overcommit memory to squeeze more VMs onto a host. All this does is force your hosts to try to reclaim memory from running VMs. If you see red at a host level, you are in trouble. It means that VMware is beginning the process to reclaim VM memory.

Guess what? SQL Server usually has the larger amount of memory on a given host, and therefore VMware will try to snag memory from that VM. SQL Server will consume all the memory you give it for its buffer cache, and generally does not like to give up that memory without a fight.

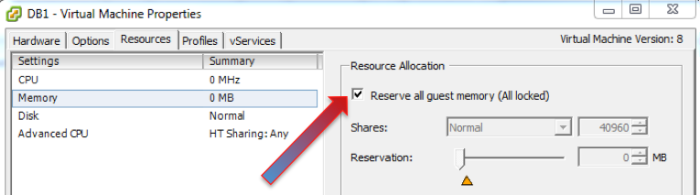

Set a full memory reservation for your business-critical SQL Servers so that you never have to worry about VMware attempting to snag memory from these VMs. Never disable the balloon driver within VMware Tools, because now you never have to worry about it!

Disk Presentation

To start with your virtual machine storage, validate your storage subsystem performance. Performance is the absolute bottom line in any virtualized environment. To be honest, I couldn’t care less what RAID type or number of disks is in your arrays. I only care about three metrics:

Maximum throughput

Maximum IOPs

Minimal latency

Performance is the top priority, and some features in arrays can offset the negative attributes of other features. Your performance for any given virtual machine must meet or exceed what you require, be it virtualized or other. I always say you need an average I/O latency under 25ms. I suggest that you have under 50% spindle busy in your disk pools. I also look for at least 60MB/s for sustained write operations (both physical and random) with a workload that has pierced your cache. The spindle busy metrics come from your SAN management interface, but the other two metrics can be quickly and easily measured with tools such as SQLIO or IOMeter.

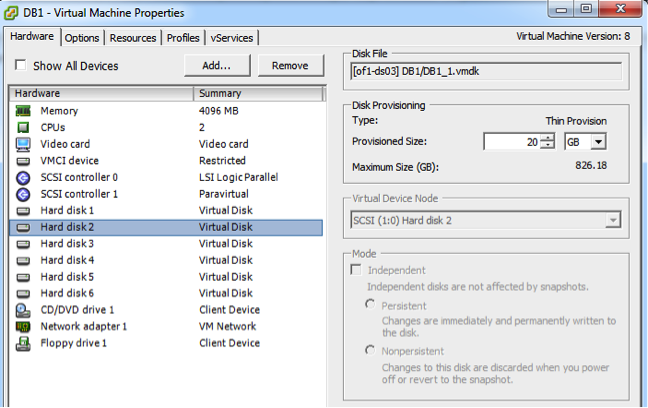

All non-operating system virtual disks attached to your SQL Server virtual machines should be using the VMware Paravirtual SCSI (PVSCSI) driver. VMware built this driver for reducing CPU overhead associated with I/O operations, and will give you a measurable performance improvement as well.

The only other design requirement that I have is that at least two paths available to any storage LUN and, if possible and/or available, multipathing drivers installed so that VMware can actively use all available paths. Tools such as EMC PowerPathVE or the Dell EqualLogic MEM driver can be used to accomplish this task.

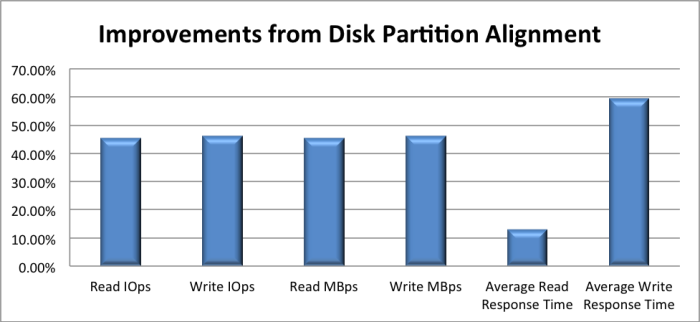

For those still running Windows Server 2003, partition disk block alignment is a major concern and is simple to fix. You could be reading two or more blocks from your SAN for one simple read within SQL Server, and that is guaranteed to slow overall performance of your SQL Server. Fixing it gives dramatic performance improvements, as the following customer study demonstrates.

Paul Randal has a great write-up on the problem here and the following MSDN article demonstrates how to fix it.

Coming Up…

In part four of this series, I discuss a number of the best practices that we follow that you should be aware of when installing and configuring your SQL Server instances inside the virtual machines. These include specific details around the operating system and SQL Server instance configuration tweaks. The final part in this series will discuss the methodology I use for demonstrating that a virtual SQL Server performs as well as the physical counterpart in an apples-to-apples comparison.

Stay tuned and check back in a couple of weeks for the next installation!