Introduction to S3 Integration for Oracle RDS

Amazon has a feature to extend how we share files with RDS instances. The feature is called S3 Integration and is intended to enhance moving files to and from RDS instances. These types of activities are especially important during a database migration onto RDS.

We could always move files to RDS using database links and UTL_FILE. This method is viable, but convoluted and clunky. And, we could not use S3 when transferring files this way. With S3 Integration for RDS, we can now use S3 as the intermediate storage for transferring Oracle Data Pump dump files and the like.

Setup Requirements for S3 Integration

There are some configuration items needed to implement S3 Integration with RDS. The two items that need to be addressed before using S3 Integration are:

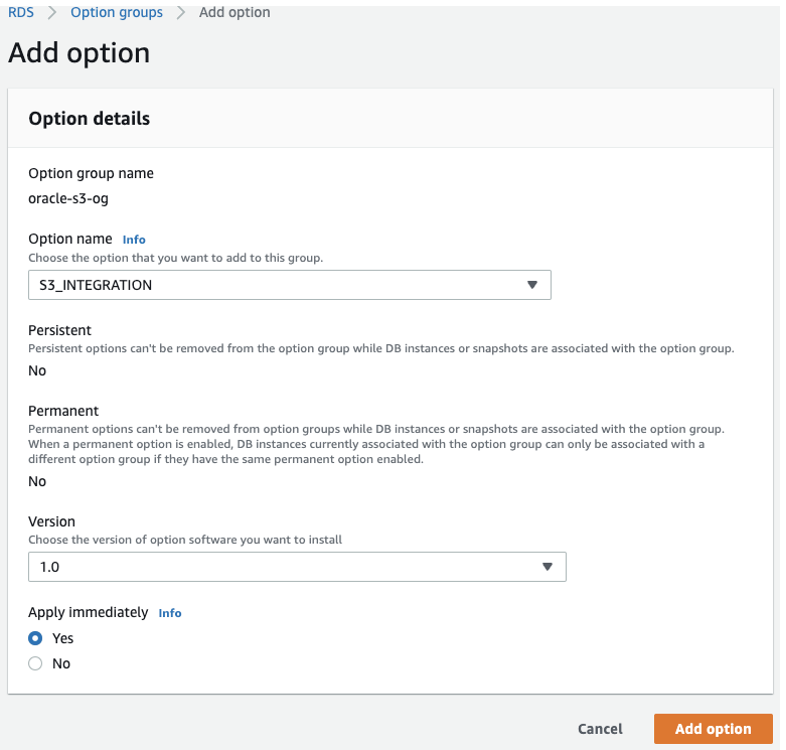

- Enable the S3 Integration feature in the Option Group for the RDS instance

- Privileges allowing the RDS instance to access the S3 bucket

The first step would be to enable the S3 Integration setting in and RDS Option Group. This can either be done for an existing Option Group or by creating a new Option Group.

If an RDS instance is running in an Option Group without the S3_Integration parameter enabled, the following error will be returned.

2022-10-14 21:27:48.075 UTC [ERROR] Unable to run S3 Integration DB task, S3 Integration is not installed on this instance.

2022-10-14 21:27:48.076 UTC [INFO ] The task failed.

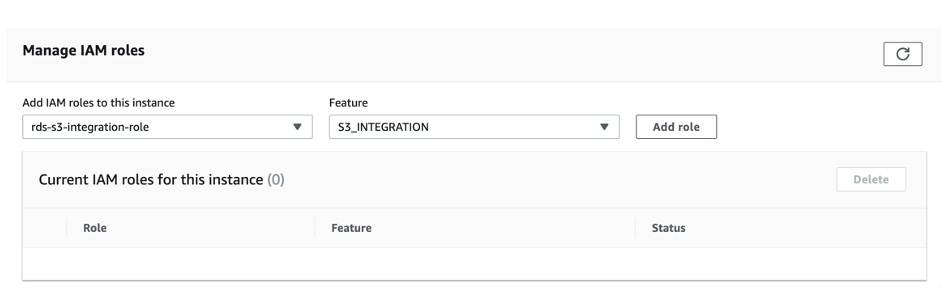

Next, the RDS instance must have privileges to access the S3 bucket. This is a common AWS configuration for many resources. The preferred way to configure this is to create a role and then assign the proper permissions for the S3 bucket to that role. Then assign the role to the RDS instance.

If an RDS instance tries to access an S3 bucket without proper privileges, the following error will be returned.

2022-09-07 20:10:32.672 UTC [ERROR] RDS doesn’t have permission to list Amazon S3 objects from bucket name mig-dp-bucket with prefix mig.dmp.

Using S3 Integration with Oracle RDS

Once all of the AWS configuration requirements have been met, RDS instances can then access files in S3 buckets. One common use case for RDS to interact with S3 is for database migrations. Oracle Data Pump dump files can be copied and stored on S3 and then used to import data into RDS.

First, we need a directory in the RDS database to hold the dump file. Login to the RDS instance and create a directory and grant permissions.

SQL> exec rdsadmin.rdsadmin_util.create_directory(p_directory_name => ‘MIG_DIR’);

PL/SQL procedure successfully completed.

SQL> grant read, write on directory MIG_DIR to rdsadmin;

Grant succeeded.

Assuming the dump file is already in the S3 bucket, we can download the file to our newly created directory in RDS using the S3 Integration command.

SQL> select rdsadmin.rdsadmin_s3_tasks.download_from_s3(

p_bucket_name => ‘mig-dp-bucket’,

p_s3_prefix => ‘mig.dmp’,

p_directory_name => ‘MIG_DIR’)

as task_id from dual;

TASK_ID

————————————————————————————————————————————

1666383920758-666

SQL> select text FROM table(rdsadmin.rds_file_util.read_text_file(‘BDUMP’,’dbtask-1666383920758-666.log’));

TEXT

————————————————————————————————————————————

2022-10-21 20:25:20.825 UTC [INFO ] This task is about to list the Amazon S3 objects for AWS Region us-east-1, bucket name mig-dp-bucket, and prefix mig.dmp.

2022-10-21 20:25:20.895 UTC [INFO ] The task successfully listed the Amazon S3 objects for AWS Region us-east-1, bucket name mig-dp-bucket, and prefix mig.dmp.

2022-10-21 20:25:20.911 UTC [INFO ] This task is about to download the Amazon S3 object or objects in /rdsdbdata/datapump from bucket name mig-dp-bucket and key mig.dmp.

2022-10-21 20:25:21.090 UTC [INFO ] The task successfully downloaded the Amazon S3 object or objects from bucket name mig-dp-bucket with key mig.dmp to the location /rdsdbdata/datapump.

2022-10-21 20:25:21.091 UTC [INFO ] The task finished successfully.

We have successfully copied the Data Pump dump file from S3 to our RDS instance. We can now run a quick test to make sure the import is successful.

$ impdp admin/manager01@migdb directory=mig_dir file=mig.dmp full=y

Import: Release 19.0.0.0.0 – Production on Fri Oct 21 20:29:47 2022

Version 19.16.0.0.0

Copyright (c) 1982, 2022, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 19c Standard Edition 2 Release 19.0.0.0.0 – Production

Legacy Mode Active due to the following parameters:

Legacy Mode Parameter: “file=mig.dmp” Location: Command Line, Replaced with: “dumpfile=mig.dmp”

Master table “ADMIN”.”SYS_IMPORT_FULL_01″ successfully loaded/unloaded

Starting “ADMIN”.”SYS_IMPORT_FULL_01″: admin/********@migdb directory=mig_dir dumpfile=mig.dmp full=y

Processing object type SCHEMA_EXPORT/USER

Processing object type SCHEMA_EXPORT/SYSTEM_GRANT

Processing object type SCHEMA_EXPORT/ROLE_GRANT

Processing object type SCHEMA_EXPORT/DEFAULT_ROLE

Processing object type SCHEMA_EXPORT/PRE_SCHEMA/PROCACT_SCHEMA

Processing object type SCHEMA_EXPORT/TABLE/TABLE

Processing object type SCHEMA_EXPORT/TABLE/TABLE_DATA

. . imported “MIG”.”MIG_TAB” 5.476 KB 24 rows

Processing object type SCHEMA_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

Processing object type SCHEMA_EXPORT/STATISTICS/MARKER

Job “ADMIN”.”SYS_IMPORT_FULL_01″ successfully completed at Fri Oct 21 20:35:06 2022 elapsed 0 00:00:15

Summary of S3 Integration Workflow

In this blog we have demonstrated the use of the S3 Integration feature with RDS. While it does extend the functionality of RDS with the ability to copy files to and from S3, it is inefficient to use for a database migration. The process of moving Oracle database data into RDS via S3 requires 4 hops.

- Use Oracle Data Pump to export the data to local storage

- Move the Data Pump dump file into S3

- Copy the Data Pump dump file from S3 to local storage on RDS

- Import the Data Pump data into the RDS database

While this does allow for using S3 storage as intermediate storage, this method requires writing the data four times, as described above. It would be much more efficient to have the ability to export the data directly to storage that can be accessed by the RDS instance. That way the data would only have to be written twice, once to the dump file and then directly to the RDS database.

For a full multi-cloud licensing view, see our post on For a full multi-cloud licensing view, see our post on Oracle on AWS, Azure, Google Cloud, and OCI.

Next Steps & Expert Support

For any other RDS Oracle S3 integration questions, reach out to our team of experts at House of Brick today.

Frequently Asked Questions

It’s a feature that lets Oracle RDS use Amazon S3 as intermediate storage for file transfers—such as Oracle Data Pump dump files—simplifying migrations and data movement.

You must enable the S3 Integration option in your RDS Option Group and assign an IAM role with S3-bucket permissions to the RDS instance.

You’ll see an error like:

Unable to run S3 Integration DB task, S3 Integration is not installed on this instance.

“` :contentReference[oaicite:9]{index=9}

Create an IAM role with the necessary S3 permissions, then attach that role to your RDS instance so it can list and download objects.

Download with:

SELECT rdsadmin.rdsadmin_s3_tasks.download_from_s3(

p_bucket_name => ‘mig-dp-bucket’,

p_s3_prefix => ‘mig.dmp’,

p_directory_name => ‘MIG_DIR’)

AS task_id FROM dual;

“` :contentReference[oaicite:11]{index=11}

Then import using Data Pump, for example:

impdp admin/manager01@migdb directory=mig_dir dumpfile=mig.dmp full=y