by Jeff Stonacek, Principal Architect

I recently completed an engagement where the client was interested in moving from on-premises hardware to the public cloud. The main application is an ERP system with multiple large Oracle databases on the backend. These databases consume very large amounts of I/O and memory, and giving them less memory drives I/O even higher. As a whole, the production applications and databases consume about 200,000 IOPS and 3 TB of memory.

The IOPS and memory consumption are spread across multiple databases and four physical hosts. Each host in the current configuration has 48 cores and 1.5 TB of memory, for a total of 6 TB. The processors in this environment are grossly oversized, peaking at under 20% CPU utilization. Under normal circumstances, we would break each workload into its component parts and fit the pieces onto Amazon EC2 instances. However, due to licensing concerns, we had to use Oracle core-based licensing, which required us to size the workload onto EC2 dedicated hosts.

At first, this did not seem like a daunting task, but once the number crunching started, it became apparent that memory was going to be an issue.

EC2 Host Types

When sizing compute hardware for expensive Oracle licenses, the goal is to use the fastest processors available. With Intel processors in particular, core-for-core performance varies widely between models in the same family. Take the following example:

- Intel Xeon Gold 6242 16-core processor: SPECrate2017 integer score of 215 for a two-socket (32-core) server, or about 6.7 per core

- Intel Xeon Gold 6244 8-core processor: SPECrate2017 integer score of 134 for a two-socket (16-core) server, or about 8.4 per core

The Gold 6244 processor is roughly 25% faster, core-for-core, than the Gold 6242. This translates into more efficient use of core-based licenses and can save real money in a large environment.
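The per-core figures above are just the whole-server score divided by the server's physical core count. A minimal sketch of that arithmetic, using only the scores and core counts quoted in the list (the helper name `per_core` is ours, for illustration):

```python
# Per-core throughput from the two-socket SPECrate2017 integer scores above.
# Scores and core counts come from the text; nothing here is measured.

def per_core(score: float, cores_per_socket: int, sockets: int = 2) -> float:
    """Divide a whole-server rate score by the server's physical core count."""
    return score / (cores_per_socket * sockets)

gold_6242 = per_core(215, 16)  # 32 cores, roughly 6.7 per core
gold_6244 = per_core(134, 8)   # 16 cores, roughly 8.4 per core

speedup = gold_6244 / gold_6242
print(f"6242: {gold_6242:.2f}/core, 6244: {gold_6244:.2f}/core, "
      f"6244 is {(speedup - 1) * 100:.0f}% faster per core")
```

Because Oracle licenses are counted per core, that per-core ratio carries straight through to the number of licenses needed for a given amount of work.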

With EC2, however, we don’t have an unlimited selection of processor types, so we need to work within the offerings that AWS provides. The EC2 host type that would work best for this workload is the i3en, because of the 60 TB of local NVMe storage that comes with the host, which solves the workload’s high IOPS requirement. The i3en uses 24-core Intel Xeon Scalable processors. While these are not ideal processors for Oracle workloads, the included NVMe storage does provide the I/O performance this workload requires.

Memory Issue

With the I/O problem solved, the next issue is memory. The i3en dedicated hosts come with 768 GB of memory, and each host has two 24-core processors, or 48 physical cores, which works out to 16 GB of memory per core. This seems like a substantial amount, but with our requirement of 3 TB of memory for the production application, it equates to four i3en dedicated hosts just for the production workload (192 total cores for production).

The EC2 z1d instance type runs on very fast 12-core Intel Xeon Scalable processors, which are ideally suited for workloads with core-based licensing requirements. However, the z1d dedicated hosts only come with 384 GB of memory. These hosts have a memory density of 16 GB per core, the same as the i3en hosts, so reaching 3 TB would take eight z1d hosts and the same 192 cores. Even though the z1d processors are better suited for Oracle workloads, the memory density would require the same number of Oracle licenses, which makes their use cost prohibitive.
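When memory rather than CPU drives the sizing, the host count is simply the memory requirement divided by memory per host, rounded up, and the core count follows from that. A minimal sketch, assuming two sockets per z1d host (24 cores), which is what the stated 16 GB per core implies; the dictionary layout and helper name are ours:

```python
import math

# Host specs as described in the text. The z1d socket count (two 12-core
# processors, 24 cores) is our assumption, consistent with 384 GB at 16 GB/core.
HOSTS = {
    "i3en": {"memory_gb": 768, "cores": 48},  # 2 x 24-core
    "z1d": {"memory_gb": 384, "cores": 24},   # 2 x 12-core (assumed)
}

REQUIRED_MEMORY_GB = 3 * 1024  # ~3 TB for the production workload


def size_by_memory(host: dict, required_gb: int) -> tuple:
    """Return (hosts, total physical cores) needed to satisfy memory alone."""
    hosts = math.ceil(required_gb / host["memory_gb"])
    return hosts, hosts * host["cores"]


for name, spec in HOSTS.items():
    hosts, cores = size_by_memory(spec, REQUIRED_MEMORY_GB)
    density = spec["memory_gb"] / spec["cores"]
    print(f"{name}: {hosts} hosts, {cores} cores, {density:.0f} GB/core")
```

Both host types land on 192 physical cores, which is why the faster z1d processors do not reduce the license count.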

In order to get the required memory from the i3en hosts for the Oracle databases, we had to overprovision CPU by a factor of four. Based on CPU consumption for the existing hardware, this workload could run on a fourth of the cores proposed in the i3en solution.

If we size this environment using on-premises hardware with Intel Xeon Gold 6244 eight-core processors, four two-socket servers (16 cores each) would give us enough CPU headroom. This option equates to 64 cores, or 32 Oracle processor licenses with the 0.5 core factor for Intel processors. On-premises hardware also gives us the flexibility of using larger memory modules, so we can easily configure each host with 768 GB of memory (48 GB per core, 3 TB across the four hosts) to achieve the required memory density.
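The on-premises numbers can be checked the same way. This sketch uses only the configuration stated above; the constant names are ours, and fractional licenses are rounded up, which is how core-factor results are normally treated:

```python
import math

# On-premises sizing from the text: four two-socket servers with
# eight-core Xeon Gold 6244 processors and 768 GB of memory each.
SERVERS = 4
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 8
MEMORY_PER_SERVER_GB = 768
INTEL_CORE_FACTOR = 0.5  # Oracle core factor for Intel processors

cores = SERVERS * SOCKETS_PER_SERVER * CORES_PER_SOCKET
licenses = math.ceil(cores * INTEL_CORE_FACTOR)
total_memory_gb = SERVERS * MEMORY_PER_SERVER_GB
gb_per_core = MEMORY_PER_SERVER_GB / (SOCKETS_PER_SERVER * CORES_PER_SOCKET)

# For comparison: four i3en dedicated hosts at 48 cores each.
i3en_licenses = math.ceil(4 * 48 * INTEL_CORE_FACTOR)

print(f"on-prem: {cores} cores, {licenses} licenses, "
      f"{total_memory_gb} GB total, {gb_per_core:.0f} GB/core")
print(f"i3en: {i3en_licenses} licenses ({i3en_licenses // licenses}x on-prem)")
```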

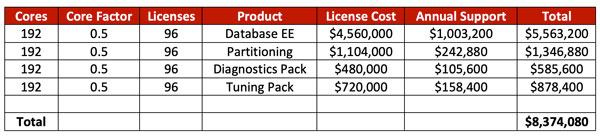

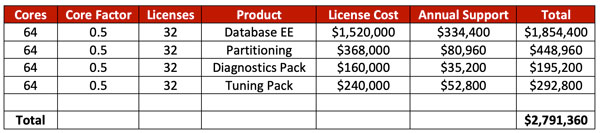

To put this in perspective, below is a comparison of what the Oracle licensing would cost at list price in the two scenarios.

EC2 i3en Option

On-Premises Option
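The license counts behind these two options follow from physical cores times the 0.5 core factor. To turn them into dollars, this sketch assumes Oracle Database Enterprise Edition at a list price of $47,500 per processor license and ignores options, discounts, and annual support. Treat that price as an assumption and check it against Oracle's current price list:

```python
import math

# Assumption: Enterprise Edition at $47,500 per processor license (list).
# Options, discounts, and annual support are deliberately left out.
LIST_PRICE_PER_LICENSE = 47_500
INTEL_CORE_FACTOR = 0.5


def license_cost(physical_cores: int, price: int = LIST_PRICE_PER_LICENSE) -> tuple:
    """Return (processor licenses, list cost) for a count of Intel cores."""
    licenses = math.ceil(physical_cores * INTEL_CORE_FACTOR)
    return licenses, licenses * price


options = {
    "EC2 i3en (4 dedicated hosts x 48 cores)": 4 * 48,
    "On-premises (4 servers x 16 cores)": 4 * 16,
}
for label, cores in options.items():
    licenses, cost = license_cost(cores)
    print(f"{label}: {licenses} licenses, ${cost:,.0f}")
```

Whatever the exact price, the ratio between the two options stays at 3:1, because it comes entirely from the core counts.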

One solution would be to use the i3en hosts and disable a portion of the cores to bring the core count in line with the memory density needed. While it is possible to optimize the CPU count for a given EC2 instance, it is not possible to reduce the core count on a dedicated host. So, reducing core count is not an option.
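At the instance level, the knob for this is the `CpuOptions` parameter of the EC2 RunInstances API (`CoreCount` and `ThreadsPerCore`). The hypothetical helper below works out the core count an instance with an i3en host's 768 GB and 48 cores would need to reach a target memory density. It does not check which `CoreCount` values AWS actually allows for a given instance type, and as noted above, trimming an instance's cores does not change the physical core count of the dedicated host underneath:

```python
# Hypothetical helper: the active core count needed for a target memory
# density, expressed as the CpuOptions structure that RunInstances accepts.
# Valid CoreCount values vary by instance type and are NOT checked here.

def cpu_options_for_density(memory_gb: int, physical_cores: int,
                            target_gb_per_core: int,
                            threads_per_core: int = 2) -> dict:
    core_count = memory_gb // target_gb_per_core
    if core_count < 1 or core_count > physical_cores:
        raise ValueError("target density not reachable on this instance")
    return {"CoreCount": core_count, "ThreadsPerCore": threads_per_core}


# 768 GB and 48 physical cores, targeting 32 GB per core.
opts = cpu_options_for_density(768, 48, 32)
print(opts)
# With boto3 this dict would be passed as ec2.run_instances(..., CpuOptions=opts).
```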

Another solution would be to break the databases down into manageable chunks and run the workload on standard EC2 instances. While this would give us more CPU and memory flexibility, we would have to license the instances under Oracle’s Cloud Licensing Policy.

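A detailed discussion of this policy is beyond the scope of this blog[1], but suffice it to say that Oracle’s Cloud Licensing Policy provides exactly half as much compute per Oracle license as typical core-based licensing. On AWS, two vCPUs count as one license, whereas under the core factor table one license covers two physical Intel cores, which is four vCPUs with hyperthreading enabled.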
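The factor of two falls out of how each policy counts. This sketch reflects our reading of the two rules (core factor 0.5 for Intel on dedicated hardware; on AWS, two vCPUs per license with multithreading enabled, one vCPU per license without it). The function names are ours, and the policy document itself is the authority:

```python
import math

INTEL_CORE_FACTOR = 0.5  # core factor table, Intel processors


def core_factor_licenses(physical_cores: int) -> int:
    """Dedicated hardware: physical cores x core factor, rounded up."""
    return math.ceil(physical_cores * INTEL_CORE_FACTOR)


def cloud_policy_licenses(vcpus: int, hyperthreading: bool = True) -> int:
    """Authorized cloud (AWS): 2 vCPUs per license with multithreading
    enabled, 1 vCPU per license with it disabled."""
    return math.ceil(vcpus / 2) if hyperthreading else vcpus


# The same 24 physical cores, i.e. 48 vCPUs with hyperthreading on.
print(core_factor_licenses(24), cloud_policy_licenses(48))
```

For the same silicon, the cloud policy yields twice the license count, which is what rules out simply spreading the databases across standard instances.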

Conclusion

It is understandable that AWS sizes its hosts the way it does. For standard workloads, even memory-intensive ones, the memory sizes usually work well. However, with EC2 host memory density at about 16 GB per CPU core, and on-premises hardware easily able to support 32 to 64 GB of memory per core, workloads requiring very large memory footprints can be challenging to size on Amazon EC2.
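To generalize the point, memory density sets a floor on the cores, and therefore the core-based licenses, a given memory footprint drags along. A minimal sketch for the 3 TB production requirement at the densities mentioned above, assuming the 0.5 Intel core factor; actual CPU demand may of course push the core count higher:

```python
import math

REQUIRED_MEMORY_GB = 3 * 1024  # ~3 TB production footprint
INTEL_CORE_FACTOR = 0.5


def cores_forced_by_memory(required_gb: int, gb_per_core: int) -> int:
    """Minimum physical cores you end up buying just to get the memory."""
    return math.ceil(required_gb / gb_per_core)


floors = {}
for density in (16, 32, 64):
    cores = cores_forced_by_memory(REQUIRED_MEMORY_GB, density)
    licenses = math.ceil(cores * INTEL_CORE_FACTOR)
    floors[density] = (cores, licenses)
    print(f"{density} GB/core -> {cores} cores, {licenses} licenses")
```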

Much of what we do at House of Brick deals with large enterprise-class Oracle databases, where very large memory requirements are the norm. Unfortunately, outlier workloads like these are sometimes difficult to size on rigid public cloud infrastructure.

[1] See Debunking the Top 5 Myths of Running Oracle Software in the Cloud for more information on the policy document Licensing Oracle Software in the Cloud Computing Environment.