Jim Hannan (@HoBHannan), Principal Architect

I strongly believe benchmarking is one of the most beneficial exercises an IT staff can undertake. The benefits are substantial:

- Building of baselines

- Establishing key metrics

- Communicating the key metrics across the organization

- Identifying scalability

- Testing for Tier 1 readiness

- Validating the infrastructure (network, storage, CPU, software, drivers, and configurations)

I think many organizations agree with this concept, but benchmarking can be resource intensive and inaccurate if done improperly. This tends to deter organizations from doing this kind of work.

Because I am a consultant, I have the benefit of working with many different organizations. I get to see what works and what does not. In fact, I spend quite a bit of time with customers doing benchmarking and Tier 1 readiness testing. In this blog I will discuss the various methods and present what works and what does not.

Why benchmark? Benchmarking provides a measurement of your hardware and its scalability. Often during benchmarking, customers expose weaknesses or misconfigurations. Additionally, benchmarking establishes baselines for future benchmarking and performance troubleshooting. But most importantly, benchmarking tests the platform for Tier 1 readiness.

I think it is important to clarify a few things before continuing on. What does it mean to baseline? A baseline establishes a performance profile when things are running well. The baseline can record things like TPS (transactions per second), I/O peaks, CPU usage, maximum I/O throughput, and many other performance indicators. The baselines can come from tools like AWR reports, NMON statistics (OS metrics), storage tools, and user response time.
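To make the idea of a baseline concrete, here is a minimal sketch of turning raw samples into a performance profile. It assumes you have already exported per-interval numbers (for example from AWR or NMON) into plain lists. The metric names, the sample values, and the choice of mean, 95th percentile, and peak as summary statistics are illustrative assumptions, not a prescribed format.

```python
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    # Nearest rank: ceiling of (n * pct / 100), with a floor of 1.
    rank = max(1, -(-len(ordered) * pct // 100))
    return ordered[int(rank) - 1]


def build_baseline(samples):
    """Summarize each metric as mean, 95th percentile, and peak."""
    return {
        name: {
            "mean": mean(vals),
            "p95": percentile(vals, 95),
            "peak": max(vals),
        }
        for name, vals in samples.items()
    }


# Hypothetical samples taken while the system was running well.
samples = {
    "tps": [410, 425, 398, 440, 612, 405, 418, 430, 421, 415],
    "cpu_pct": [35, 38, 33, 41, 78, 36, 37, 39, 38, 36],
}

baseline = build_baseline(samples)
for name, stats in baseline.items():
    print(name, stats)
```

Keeping the peak alongside the average matters: a baseline that only records averages hides exactly the spikes you will later need for troubleshooting.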

What does Tier 1 readiness mean? Tier 1 readiness is simply a key set of benchmarks designed to validate and record the performance of a platform (hardware and software) in its ability to service the workloads.

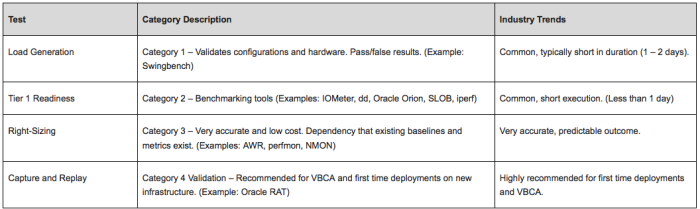

There are several different approaches to benchmarking. In the table below we categorized the different approaches and identified the level-of-effort for each category. A more detailed description of each test is below the table.

Load Generation

Working from the top down, let's look at load generation techniques. Load generation tools like Swingbench and even SPEC CPU2006 (CPU/hardware analysis) ship with a preset suite of tests. They are typically the easiest to set up, but the preset workloads may not behave anything like your database or application, so they fall short in accuracy of results. That said, people who are very familiar with Swingbench can accurately measure performance by running the same tests across many different hardware configurations. The results then have a point of reference, meaning the previous results can be compared to the new results.
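The point-of-reference idea is simple to express in code. The sketch below assumes you have reduced each run of the same preset test to a few headline numbers. The metric names and values are made up for illustration and are not the output format of any particular tool.

```python
def compare_runs(reference, candidate):
    """Return the percent change of each shared metric relative to the reference run."""
    changes = {}
    for metric, ref_value in reference.items():
        # Skip metrics missing from the new run, and avoid dividing by zero.
        if metric not in candidate or ref_value == 0:
            continue
        changes[metric] = (candidate[metric] - ref_value) / ref_value * 100.0
    return changes


# Hypothetical results from the same preset test on old and new hardware.
reference = {"tps": 1200.0, "avg_response_ms": 8.0}
candidate = {"tps": 1500.0, "avg_response_ms": 6.0}

changes = compare_runs(reference, candidate)
for metric, pct in changes.items():
    print(f"{metric}: {pct:+.1f}%")
```

The comparison only means something if the test definition stays identical between runs, which is why familiarity with the tool matters more than the tool itself.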

Tier 1 Readiness

I should tell you that some of my favorite tools live in this category. These tests tend to be very focused on particular elements such as storage, network, or CPU. I like to tell customers these tests expose the tipping points. What I mean by that is, these tests give you the absolute best case for throughput. Another reason I am a big proponent of these tests is that they are the most approachable. They do not take a lot of time to set up, and they don’t need an Oracle installation. In fact, our SQL Server team uses a lot of the same tools.
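One way to picture a tipping point: step up the load on a single component and watch for the level where throughput stops improving. The sketch below is one possible definition, assuming a list of (load level, throughput) pairs from a focused storage or network test. The 5% gain threshold and the sample numbers are arbitrary assumptions for illustration.

```python
def find_tipping_point(results, min_gain_pct=5.0):
    """Find where adding load stops paying off.

    results: list of (load_level, throughput) pairs sorted by load_level.
    Returns the first pair after which the next step adds less than
    min_gain_pct more throughput, or the last pair if gains never flatten.
    """
    for (load, tput), (_, next_tput) in zip(results, results[1:]):
        gain_pct = (next_tput - tput) / tput * 100.0
        if gain_pct < min_gain_pct:
            return load, tput
    return results[-1]


# Hypothetical storage test: concurrent I/O streams vs. MB/s.
results = [(1, 200), (2, 390), (4, 760), (8, 1400), (16, 1450), (32, 1430)]

tipping_point = find_tipping_point(results)
print(tipping_point)
```

In this made-up data set, going from 8 to 16 streams adds under 4% more throughput, so 8 streams is reported as the tipping point and roughly 1,400 to 1,450 MB/s is the best case for that component.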

Right-Sizing

It could be argued that right-sizing does not fit into the benchmarking category, but I would disagree. It’s a process, and probably the most important of the four. After several edits of this paragraph, I realized something. I was originally going to say that right-sizing is a virtualization-only step. That is simply not true. Right-sizing is just as important for physical hardware as it is for virtual.

What data do you need for right-sizing? For Oracle, customers are most successful when they have collected OS metrics and AWR reports. The metrics should be collected every day, and the history should go back at least a month, or even a year.

Why so much data? For your business-critical applications, understanding their peaks is important. For example: what does month end look like? Does the application get very busy around Christmas or the beginning of the school year?
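Here is a rough sketch of why the long history matters. It assumes you have reduced your OS metrics to one number per day (peak CPU cores in use) and want to size for the busiest period plus some headroom. The 25% headroom figure, the function names, and the sample data are assumptions for illustration, not a sizing rule.

```python
import math
from collections import defaultdict
from datetime import date


def monthly_peaks(daily_cpu):
    """Map (year, month) to the highest daily peak seen in that month."""
    peaks = defaultdict(float)
    for day, cores in daily_cpu.items():
        key = (day.year, day.month)
        peaks[key] = max(peaks[key], cores)
    return dict(peaks)


def recommended_cores(daily_cpu, headroom=0.25):
    """Size for the highest observed peak plus a headroom fraction (assumed 25%)."""
    peak = max(daily_cpu.values())
    return math.ceil(peak * (1 + headroom))


# Hypothetical daily peak CPU cores in use.
daily_cpu = {
    date(2023, 11, 15): 6.0,
    date(2023, 11, 30): 9.5,   # month end
    date(2023, 12, 20): 14.0,  # holiday rush
    date(2023, 12, 31): 11.0,
    date(2024, 1, 10): 5.5,
}

peaks = monthly_peaks(daily_cpu)
cores = recommended_cores(daily_cpu)
print(peaks)
print(cores)
```

If this sample had only covered January, the sizing would have come out at 7 cores instead of 18, which is the whole argument for collecting data across seasonal peaks.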

Capture and Replay

This is the most accurate of the testing options available. A capture and replay benchmark takes the application code and data and tests them on the new hardware. I have seen many different sets of tests. Some customers replay only the critical or most prevalent SQL, while others test all SQL over a defined period.

Summary

I mentioned at the beginning of the blog that I have the luxury of working with many different customers. I get to see what works and, in some cases, what does not work as well. For most customers, a combination of the four approaches in the right mix works best. Understanding when to use the appropriate tool is important. Additionally, the customers that are most successful with benchmarking have adopted their own methodology and processes. This allows the organization to be clear about what the key metrics are and how to run the tests consistently each time. This is a deep topic with a host of considerations. If you have questions or comments, I can be reached via Twitter @HoBHannan.