Jim Hannan (@HoBHannan), Principal Architect

What is VBCA?

What is Virtualizing Business Critical Applications (VBCA)? A Business Critical Application is exactly what it sounds like: an application that is critical to business success and day-to-day operations. Without it, a business would struggle to function. Common business critical applications include Oracle RDBMS, SQL Server, Exchange, and SAP, to name a few.

I believe vSphere 5, as much as anything else, is a VBCA release. What I mean is that, compared to previous releases, its new features are primarily enhancements that make it a better platform for VBCAs. VBCA workloads typically have large resource requirements for CPU and memory and, in the case of databases, I/O throughput. VMware has creatively dubbed this type of workload a ‘Monster VM’.

With vSphere 5, VMware has created a platform more than capable of running Monster VMs. This blog, and the next 5-6 following it, will highlight some of the key features that, in my opinion, give vSphere 5 the accolade of a “VBCA release”.

Part I – Scalable Virtual Machines and Enhanced co-scheduler

At this point it is well known that a single VM in vSphere 5 can run a maximum of 32 vCPUs. For me, this number is staggeringly high. At HoB, we have been virtualizing VBCA workloads as far back as ESX versions 3 and 4, which had maximums of 4 and 8 vCPUs respectively. In our estimation, 90% of workloads can fit into a configuration of 8 vCPUs (or fewer). At Indiana University we experienced this first hand when assisting them with virtualizing their OnCourse system. The OnCourse Oracle database was previously on an AIX Power5 LPAR with 12 CPUs allocated. During peak workloads the Oracle database was consuming 9.5 processors. After virtualizing the workload and load testing it, we determined not only that the workload would fit within the 8 vCPU maximum, but also that it was using only 35% – 50% of the CPU. This left plenty of room for the database to scale. Fast forward to today. With a maximum of 32 vCPUs, the door has opened for virtualizing 95% of the workloads in existence.
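To make the Indiana University numbers concrete, here is a minimal sketch of the arithmetic. It assumes the 35% – 50% figure refers to utilization of the full 8 vCPU allocation; the function and variable names are made up for illustration. It does not try to equate a Power5 processor with an x86 vCPU, since the two are not directly comparable.

```python
# Illustrative sizing arithmetic using the figures quoted in the post.
# Assumption: the utilization range applies to all 8 allocated vCPUs.
VCPUS_ALLOCATED = 8
UTIL_LOW, UTIL_HIGH = 0.35, 0.50


def vcpus_busy(allocated, utilization):
    """Return how many vCPUs' worth of CPU time is in use."""
    return allocated * utilization


busy_low = vcpus_busy(VCPUS_ALLOCATED, UTIL_LOW)
busy_high = vcpus_busy(VCPUS_ALLOCATED, UTIL_HIGH)
headroom = VCPUS_ALLOCATED - busy_high  # idle vCPUs at the high end of the range

print(f"Busy vCPUs: {busy_low:.1f} to {busy_high:.1f} of {VCPUS_ALLOCATED}")
print(f"Idle vCPUs at the high end: {headroom:.1f}")
```

Under that assumption the database keeps roughly 2.8 to 4 vCPUs busy, leaving about half of the 8 vCPU allocation free even at the high end.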

How did VMware make the jump from 8 vCPU maximum to 32 vCPU maximum?

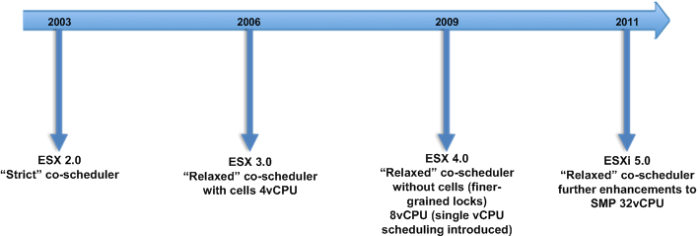

This is an intriguing question. The best public information available on this achievement is in the VMware whitepaper The CPU Scheduler in VMware ESX 4. The “relaxed” co-scheduler was first introduced in ESX version 3.0, with a maximum single-VM configuration of 4 vCPUs. In this version, the VMware engineers adopted a cell model, in which cells were assigned to pCPUs (physical CPUs). A common processor configuration during the ESX 3 era was a physical processor with 4 cores. As pCPU core counts increased on AMD and Intel chips, VMware determined that the cell model was no longer adequate.

In ESX 4 the VMware engineers moved from the 4 vCPU limit to 8 vCPUs by replacing the cell architecture with finer-grained locks. This allowed a single VM to span multiple pCPUs and allows one vCPU to be scheduled for a given task. It gives the guest OS the ability to schedule one vCPU for a single process or thread, and it greatly reduces the overhead, or cost, of CPU scheduling compared to the previous ESX version.
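A toy model can show why a cell-based design caps VM size. This is not the ESX scheduler, only a sketch under one stated assumption: in the cell model, all of a VM's vCPUs must be placed within a single cell of pCPUs, whereas without cells a VM may span any pCPUs on the host. The function names and host sizes are hypothetical.

```python
# Toy placement rules, NOT the actual ESX scheduler logic.
# Assumption: under the cell model a VM cannot span more than one cell.


def fits_cell_model(vcpus, pcpus, cell_size):
    """True if the VM's vCPUs fit inside one cell of `cell_size` pCPUs."""
    cells = pcpus // cell_size  # number of whole cells on the host
    return cells > 0 and vcpus <= cell_size


def fits_without_cells(vcpus, pcpus):
    """True if the VM's vCPUs fit anywhere across the host's pCPUs."""
    return vcpus <= pcpus


HOST_PCPUS = 16  # hypothetical host

for vm_vcpus in (4, 8):
    print(
        f"{vm_vcpus} vCPU VM: "
        f"cell model (size 4) = {fits_cell_model(vm_vcpus, HOST_PCPUS, 4)}, "
        f"no cells = {fits_without_cells(vm_vcpus, HOST_PCPUS)}"
    )
```

In this model the 8 vCPU VM is rejected when cells hold 4 pCPUs, even though the host has 16 pCPUs available, and it is accepted once the cell boundary is removed.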

ESXi 5 further enhanced SMP scheduling, increasing SMT application performance. In some cases this increase can reach 10% – 30% (see What’s New in Performance in VMware vSphere 5.0). And, of course, the maximum for a single VM increased from the previous 8 vCPUs to 32 vCPUs.

Here’s the evolution timeline of the co-scheduler: