Jim Hannan (@HoBHannan), Principal Architect

My next few blog posts will feature VMFS, In-guest, and RDM storage presentations. This will be a comparison of the different methods, how they differ, and what tooling options are available. In the final post I will review some Use Cases we have implemented.

VMFS vs. RDM Part I

Storage is one of the most important components when providing infrastructure to business critical applications, databases in particular. Most DBAs and administrators are well aware of this. In conversations with our customers, we are asked a common question during the design stage:

“Should I use VMFS, RDM or In-Guest storage?”

The question refers to storage presentation types. Storage presentation is the methodology used to present storage to your workloads. Here are the storage options available to you:

VMFS

NFS

RDM-V

RDM-P

In-guest

I like this question because it leads into important conversations that go far beyond performance. You are also deciding on tooling, and that choice determines what options you have available. Are you going to use SAN-level snapshots? Will the database files exist on volumes that can use SAN tools for cloning and backups? Will you be using VMware SRM (Site Recovery Manager), Fault Tolerance, vCloud Director, or VMware Data Director? All of this should be considered while selecting the storage presentation type.

Performance

Before we get into tooling considerations, we should talk about performance. In my experience, the storage presentations mentioned above can all deliver Tier 1 performance. Implementation is the key to whether a storage type performs well, and understanding your storage vendor’s best practices is fundamental. For example, iSCSI and NFS are typically optimized for jumbo frames. If you skip the step of enabling jumbo frames, your storage can run at roughly half the throughput it is capable of.
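Jumbo frames only help when every device between the host and the array is configured for them. As a minimal sketch of that check, assuming a hypothetical list of hops and an MTU of 9000 (confirm the value your storage vendor recommends), the following flags any hop that would undermine the setting. The `Hop` type, the hop names, and the MTU values are illustrative, not taken from any real environment.

```python
from dataclasses import dataclass

# Assumption: 9000 is a commonly used jumbo frame MTU; your vendor may differ.
JUMBO_MTU = 9000


@dataclass
class Hop:
    """One device or port on the path between the host and the storage array."""
    name: str
    mtu: int


def undersized_hops(path, required_mtu=JUMBO_MTU):
    """Return the hops whose MTU is below the required size."""
    return [hop for hop in path if hop.mtu < required_mtu]


# Hypothetical storage path; the names and values are made up for illustration.
storage_path = [
    Hop("vmkernel port", 9000),
    Hop("virtual switch", 9000),
    Hop("physical switch port", 1500),
    Hop("array interface", 9000),
]

for hop in undersized_hops(storage_path):
    print(f"{hop.name}: MTU {hop.mtu} is below {JUMBO_MTU}")
```

In this made-up path the physical switch port is the only hop reported, which is the kind of single missed setting that vendor best-practice guides are meant to catch.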

What about RAID level? It is an interesting time in the world of storage. MetaLUNs and SSD caching have made our approach to storage much different from 5 years ago, when high-end storage was always built on RAID 10 with “hot” files separated onto their own LUNs. For these reasons, we at HoB stay away from the generic recommendation that your (business critical) database must live on RAID 10.

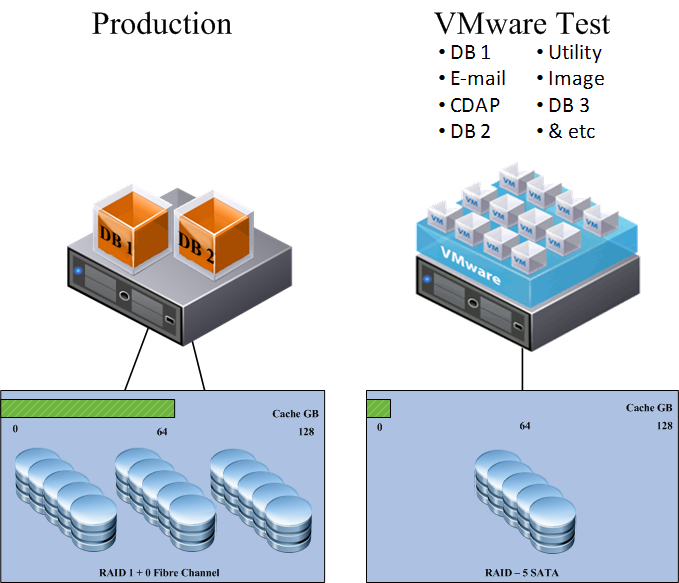

The graphic below is something that we often run into when helping customers implement storage infrastructure. We call it our Apples and Oranges Comparison. Within 5 seconds of showing this slide we typically see storage and vSphere administrators’ heads nodding up and down in agreement.

The comparison depicts a traditional physical environment with dedicated storage that is not shared. In contrast, the VMware environment is set up to share storage among many virtual machines. The fundamental flaw we find is that the vSphere environment is built exclusively with consolidation in mind. Do not misunderstand our message: building for heavy consolidation is a great way to build a vSphere cluster or clusters. But for your Tier 1 workloads you will need to think differently; the same procedures and consolidation ratios will not always meet business critical application SLAs.

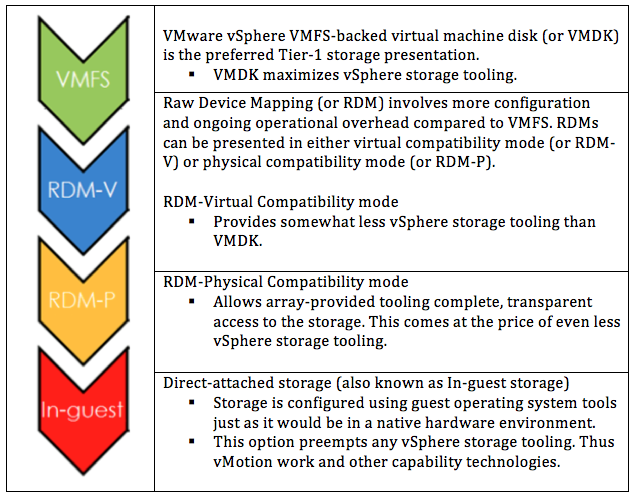

Consider Tooling

We encourage customers to weigh two criteria when making this decision: performance (previously discussed) and tooling. For tooling, VMFS offers the maximum number of options; there really is no limitation on vSphere tooling or its complementary products, such as VMware SRM or vCloud Director. The other storage presentations, RDMs and in-guest storage, do limit your VMware tooling. An example of this is VMware Fault Tolerance: VMware FT is currently supported only with VMFS. Below is a diagram that Dave Welch, our CTO and Chief Evangelist, put together. I like this slide because it simply displays the four primary storage presentation types. The slide starts at the top with our preferred storage type and finishes at the bottom with our least recommended storage solution.

IMPORTANT: This does not mean that one storage presentation type is the only best practice or that another is simply wrong for your IT organization. In fact, we have assisted with implementations that used each of the storage types. Our general rule is that we prefer VMFS over all other storage options until inputs lead us elsewhere.

It should be noted that there are two flavors of RDMs (physical and virtual). I will discuss the specifics of each later in this post.

You are probably wondering what the difference is between RDM-P and RDM-V, and what In-Guest storage is. First let us look at RDMs. Part II will explore In-Guest storage, and Part III of this series will discuss use cases for the different storage presentation types.

RDM-P and RDM-V

You can configure RDMs in virtual compatibility mode (or RDM-V), or physical compatibility mode (or RDM-P).

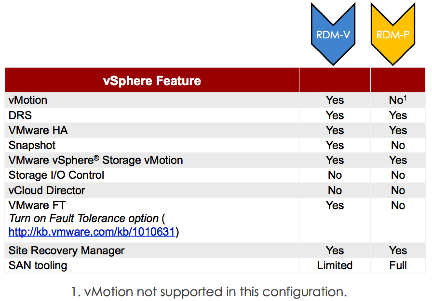

RDM-V specifies full virtualization of the mapped device. In RDM-V mode the hypervisor is responsible for SCSI commands. With the hypervisor as the operator for SCSI commands, a larger set of virtualization tooling is available.

In RDM-P the SCSI commands pass directly through the hypervisor unaltered. This allows for SAN management tooling of the LUN. The cost of RDM-P is losing vSphere snapshot-based tooling.

Note: The one exception to SCSI pass-through in RDM-P is the REPORT LUNs command. REPORT LUNs is virtualized so that the LUN can be isolated to the virtual machine that owns it. For more information, see the online vSphere Storage documentation under the topic RDM Virtual and Physical Compatibility Modes.

A common use of RDM-P is for technologies that rely on SCSI reservations, such as Microsoft Clustering. A SCSI reservation is a way for a host to reserve exclusive access to a LUN in a shared storage configuration.

Oracle Cluster Services does not use SCSI reservations. Instead, Oracle relies on its own software mechanisms to protect the integrity of the shared storage in a RAC configuration. HoB recommends RDM-V rather than RDM-P for Oracle RAC configurations for those shops that choose not to follow the VMDK recommendation.

RDM-P allows SAN tooling access to the storage. For this reason, shops that heavily leverage such tooling might be tempted to configure RDM-P.
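The trade-offs described in this post can be sketched as a small decision helper. This is only an illustration of the reasoning here (prefer VMFS until inputs lead elsewhere, FT only on VMFS, RDM-P for SCSI reservations and SAN tooling at the cost of vSphere snapshots); it is not a VMware or HoB tool. The `Requirements` fields and the `suggest_presentation` function are hypothetical names, and NFS and in-guest storage are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical inputs gathered during the design stage."""
    needs_fault_tolerance: bool = False    # VMware FT
    needs_vsphere_snapshots: bool = False  # vSphere snapshot-based tooling
    needs_scsi_reservations: bool = False  # e.g. Microsoft Clustering
    needs_san_lun_tooling: bool = False    # SAN management tooling on the LUN
    wants_raw_lun: bool = False            # shop prefers an RDM over a VMDK


def suggest_presentation(req):
    """Suggest VMFS, RDM-V, or RDM-P following the rules of thumb in this post."""
    wants_passthrough = req.needs_scsi_reservations or req.needs_san_lun_tooling
    if wants_passthrough:
        # RDM-P passes SCSI commands through, but gives up FT and vSphere snapshots.
        if req.needs_fault_tolerance or req.needs_vsphere_snapshots:
            raise ValueError("RDM-P conflicts with FT or vSphere snapshot tooling")
        return "RDM-P"
    if req.wants_raw_lun:
        # FT is described here as supported only with VMFS.
        if req.needs_fault_tolerance:
            raise ValueError("RDM-V conflicts with Fault Tolerance")
        return "RDM-V"
    # Default: prefer VMFS until inputs lead elsewhere.
    return "VMFS"


print(suggest_presentation(Requirements()))
print(suggest_presentation(Requirements(needs_scsi_reservations=True)))
print(suggest_presentation(Requirements(wants_raw_lun=True)))
```

Raising an error on conflicting inputs, rather than silently picking one option, mirrors the design conversation: when requirements collide, the tooling choices need to be revisited with the customer.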

Finally, I leave you with a comparison of RDM-P vs. RDM-V. In the next blog I will cover In-guest storage and begin to discuss some storage presentation use cases.