Nathan Biggs (@nathanbiggs), CEO

Friends of House of Brick:

In early 2014 we set out to dramatically increase the amount and quality of information that we share with our network. We wanted to make sure that what we share with our customers, partners, prospective customers, and yes—even our competitors—was immediately applicable and useful. That attitude defines how we do things at House of Brick.

Rather than engaging in hard-sell tactics, we try to immediately help our contacts understand and find solutions for their most vexing business-critical IT challenges. Occasionally those challenges are only technical (pause for polite chuckle). More frequently, those challenges are a complex and dynamic mix of people (personalities, politics, communication, skill levels, silos), process (or typically lack thereof), and technology (the appropriate application of infrastructure, platform, application and support software, user interface, and management controls). We have estimated that 80% or more of the work we do for customers is not technical, but falls into those other two categories. That also defines House of Brick—bringing the best possible IT solutions to our customers, for the lowest effective price.

We thought that a great way to end the year would be to publish our three most popular blog posts of 2014. I would like to share them with you, one each week for the remainder of 2014, and give you some perspective on why I think they were so popular.

Here’s to a successful 2015 for all our friends. Thank you for making our work so rewarding.

#3— VMFS vs. RDM, by Jim Hannan

Jim Hannan is one of our Principal Architects at House of Brick. Perhaps more than some of our other PAs, Jim seems to gravitate toward customer engagements that require a particularly sensitive approach to each area of dynamic focus: people, process, and technology. This blog post by Jim was our third most viewed post of the year, and you can see why it was so popular: the solution to a complex problem in one environment may actually cause more serious problems in another.

Please enjoy reading Jim’s blog post again.

Nathan

VMFS VS. RDM (PART I)

By Jim Hannan (@HoBHannan), Principal Architect

Originally posted: July 3, 2014

My next few blog posts will feature VMFS, In-guest, and RDM storage presentations. The series compares these methods, explains how they differ, and covers the tooling options available with each. In the final post I will review some use cases we have implemented.

Storage is one of the most important components when providing infrastructure for business-critical applications, databases in particular. Most DBAs and administrators are well aware of this. During the design stage, customers commonly ask us:

“Should I use VMFS, RDM or In-Guest storage?”

The question refers to storage presentation types. Storage presentation is the methodology used to present storage to your workloads. Here are the storage options available to you:

- VMFS

- NFS

- RDM-V

- RDM-P

- In-guest

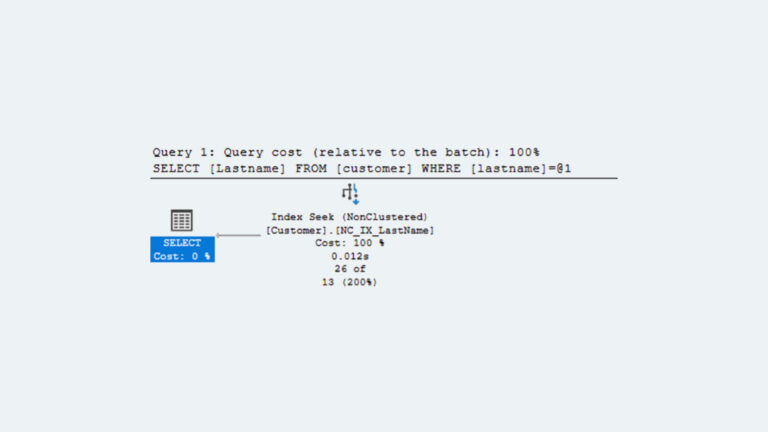

I like this question because it leads to important conversations that go far beyond performance. You are also deciding on tooling, and that choice determines which options you have available. Are you going to use SAN-level snapshots? Will the database files live on volumes that can use SAN tools for cloning and backups? Will you be using VMware SRM (Site Recovery Manager), Fault Tolerance, vCloud Director, or VMware Data Director? All of this should be considered when selecting the storage presentation type.

Performance

Before we get into tooling considerations, we should talk about performance. In my experience, all of the storage presentations listed above can deliver tier-1 performance. Implementation is the key factor in whether a storage type performs well, and understanding your storage vendor’s best practices is fundamental. For example, iSCSI and NFS are optimized to use jumbo frames. Skip enabling jumbo frames and your storage can run at half the throughput it is capable of.
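Part of the reason jumbo frames matter is simple arithmetic: a 9000-byte MTU moves the same data in far fewer frames than the default 1500-byte MTU, so the host and array process far fewer packets. The sketch below assumes IPv4 and TCP headers without options (40 bytes) and standard Ethernet framing overhead (38 bytes: preamble/SFD, header, FCS and inter-frame gap). It ignores iSCSI and NFS protocol headers, so treat the numbers as illustrative, not as a prediction of your array's throughput.

```python
import math

# Assumed per-frame overheads: IPv4 + TCP without options, standard Ethernet framing.
IP_TCP_HEADERS = 40     # bytes inside the MTU that are not payload
ETHERNET_OVERHEAD = 38  # preamble/SFD 8 + header 14 + FCS 4 + inter-frame gap 12


def payload_per_frame(mtu: int) -> int:
    """Application bytes carried by one full-size frame."""
    return mtu - IP_TCP_HEADERS


def frames_needed(total_bytes: int, mtu: int) -> int:
    """Number of full-size frames needed to move total_bytes."""
    return math.ceil(total_bytes / payload_per_frame(mtu))


def wire_efficiency(mtu: int) -> float:
    """Fraction of the bytes on the wire that are payload."""
    return payload_per_frame(mtu) / (mtu + ETHERNET_OVERHEAD)


if __name__ == "__main__":
    one_gib = 1024 ** 3
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {frames_needed(one_gib, mtu):,} frames per GiB, "
              f"{wire_efficiency(mtu):.1%} wire efficiency")
```

Header savings alone are modest (roughly 95% versus 99% efficiency under these assumptions). The larger effect is that a 1 GiB transfer needs about six times fewer frames at MTU 9000, and therefore much less per-packet processing on both ends. This is why jumbo frames must be enabled end to end, on the host, the switches, and the array, to pay off.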

What about RAID level? It is an interesting time in the world of storage. MetaLUNs and SSD caching have changed how we approach storage compared with five years ago, when high-end storage was always built on RAID 10, with “hot” files separated onto their own LUNs. For exactly these reasons, we at HoB stay away from the generic recommendation that your business-critical database must live on RAID 10.
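The old RAID 10 rule came largely from write penalty: each front-end write costs two back-end I/Os on RAID 10, four on RAID 5, and six on RAID 6. A common back-of-the-envelope estimate of host-visible IOPS is raw IOPS / (read fraction + write fraction × penalty). The sketch below applies that formula. The disk count and per-disk IOPS are hypothetical, and it deliberately ignores the controller cache, SSD tiers, and metaLUN striping that, as noted above, have weakened the rule.

```python
# Classic back-end I/Os per front-end write for common RAID levels.
WRITE_PENALTY = {"RAID10": 2, "RAID5": 4, "RAID6": 6}


def effective_iops(disks: int, iops_per_disk: float, read_fraction: float, raid: str) -> float:
    """Estimate host-visible IOPS for a spindle group, ignoring cache and SSD tiers."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    raw = disks * iops_per_disk
    write_fraction = 1.0 - read_fraction
    return raw / (read_fraction + write_fraction * WRITE_PENALTY[raid])


if __name__ == "__main__":
    # Hypothetical group: 16 drives at ~180 IOPS each, 70% reads.
    for level in ("RAID10", "RAID5", "RAID6"):
        print(level, round(effective_iops(16, 180, 0.7, level)))
```

With these inputs RAID 10 delivers roughly 2,215 IOPS versus about 1,516 for RAID 5 and 1,152 for RAID 6. Once a large write cache absorbs most writes, this formula can overstate the difference, which is why a blanket RAID 10 rule no longer fits every array.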

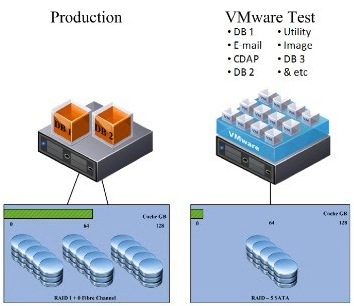

Below is something we often run into when helping customers implement storage infrastructure. We call it our apples-to-oranges comparison. Within five seconds of our showing this slide, storage and vSphere administrators are typically nodding in agreement.

The comparison depicts a traditional physical environment with dedicated, unshared storage. By contrast, the VMware environment is set up to share storage among many virtual machines. This difference is fundamental, and it is where we find flaws in vSphere environments built exclusively with consolidation in mind. Do not misunderstand our message: building for heavy consolidation is a great way to build a vSphere cluster or clusters. For your tier-1 workloads, however, you need to think differently, because the same procedures and consolidation ratios will not always meet business-critical application SLAs.
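A quick way to see the apples-to-oranges problem is to divide a shared datastore's back-end IOPS among the VMs on it. In the purely hypothetical figures below, the same LUN that gives a dedicated physical server 5,000 IOPS averages 200 IOPS per VM when 25 VMs share it, and 120 if a busy neighbor such as a backup job takes 40% first. The function name and the even-split assumption are illustrative, not measurements from any environment.

```python
def iops_per_vm(datastore_iops: float, vm_count: int, neighbor_share: float = 0.0) -> float:
    """Average IOPS left for each VM on a shared datastore.

    neighbor_share: fraction of the datastore's IOPS taken by a busy neighbor
    (for example, a backup job) before the remainder is split evenly.
    """
    if vm_count < 1:
        raise ValueError("vm_count must be at least 1")
    if not 0.0 <= neighbor_share < 1.0:
        raise ValueError("neighbor_share must be in [0, 1)")
    return datastore_iops * (1.0 - neighbor_share) / vm_count


if __name__ == "__main__":
    # Hypothetical figures: the same 5,000-IOPS LUN, dedicated vs. shared.
    print("dedicated physical server:", iops_per_vm(5000, 1))
    print("datastore shared by 25 VMs:", iops_per_vm(5000, 25))
    print("25 VMs plus a backup using 40%:", iops_per_vm(5000, 25, 0.4))
```

Real contention is burstier than an even split, but the direction is the point: a tier-1 database sized against a dedicated LUN can be badly underserved at a typical consolidation ratio.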

Consider Tooling

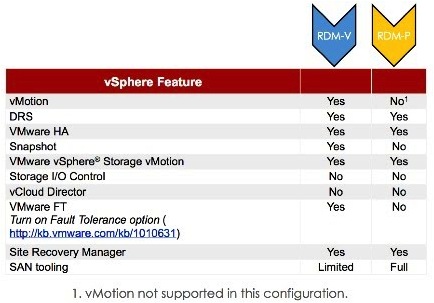

We encourage customers to weigh two criteria when making this decision: performance (discussed above) and tooling. For tooling, VMFS offers the most; there is really no limitation with vSphere tooling or its complementary products, such as VMware SRM or vCloud Director. The other storage presentations, RDMs and in-guest storage, do limit your VMware tooling. VMware Fault Tolerance is one example: VMware FT is currently supported only with VMFS. Below is a diagram that Dave Welch, our CTO and Chief Evangelist, put together. I like this slide because it simply displays the four primary storage presentation types, starting at the top with our preferred storage type and finishing with our least recommended.

IMPORTANT: This does not mean that one storage presentation type is the only best practice, or that another is simply wrong for your IT organization. In fact, we have assisted with implementations that used each of these storage types. Our general rule is to prefer VMFS over all other storage options until the inputs lead us elsewhere.

Note that there are two flavors of RDM: physical and virtual. I will discuss the specifics of each later in this post.

- VMDK maximizes vSphere storage tooling.

Raw Device Mapping (or RDM) involves more configuration and ongoing operational overhead compared to VMFS. RDMs can be presented in either virtual compatibility mode (or RDM-V) or physical compatibility mode (or RDM-P).

RDM-Virtual Compatibility mode

- Provides somewhat less vSphere storage tooling than VMDK.

RDM-Physical Compatibility mode

- Allows array-provided tooling complete, transparent access to the storage. This comes at the price of even less vSphere storage tooling.

Direct-attached storage (also known as In-guest storage)

- Storage is configured using guest operating system tools just as it would be in a native hardware environment.

- This option preempts any vSphere storage tooling, though vMotion and other compatible technologies still work.
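HoB's rule of thumb, prefer VMFS until the inputs lead elsewhere, amounts to walking the slide's ranking from top to bottom and stopping at the first presentation type that meets every requirement. The sketch below encodes only constraints stated in this post: Fault Tolerance requires VMFS, RDM-P gives up vSphere snapshot-based tooling, and in-guest storage preempts vSphere storage tooling. The assumption that only RDM-P and in-guest storage give array tools transparent access is ours, and the `Requirements` flags and `choose` function are hypothetical; a real design review weighs far more inputs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Presentation(Enum):
    """Storage presentation types, listed in the slide's order of preference."""
    VMDK_ON_VMFS = "VMDK on VMFS"
    RDM_V = "RDM-V"
    RDM_P = "RDM-P"
    IN_GUEST = "In-guest"


@dataclass(frozen=True)
class Requirements:
    """Hypothetical design inputs; a real design review has many more."""
    fault_tolerance: bool = False           # VMware FT (VMFS only, per this post)
    vsphere_snapshots: bool = False         # vSphere snapshot-based tooling on the data disks
    transparent_array_access: bool = False  # array tools see the LUN directly


def satisfies(p: Presentation, req: Requirements) -> bool:
    """Check one presentation type against the constraints stated in this post."""
    if req.fault_tolerance and p is not Presentation.VMDK_ON_VMFS:
        return False
    # RDM-P loses vSphere snapshot tooling; in-guest storage preempts all of it.
    if req.vsphere_snapshots and p in (Presentation.RDM_P, Presentation.IN_GUEST):
        return False
    # Assumption: only RDM-P and in-guest storage give array tools transparent access.
    if req.transparent_array_access and p in (Presentation.VMDK_ON_VMFS, Presentation.RDM_V):
        return False
    return True


def choose(req: Requirements) -> Optional[Presentation]:
    """Walk the ranking top to bottom and return the first type that fits."""
    for p in Presentation:  # Enum iteration follows definition order
        if satisfies(p, req):
            return p
    return None  # requirements conflict, e.g. FT plus transparent array access
```

Because the loop stops at the first match, VMDK wins whenever nothing rules it out, which mirrors the "prefer VMFS" default. A `None` result flags a combination of requirements that no single presentation type can meet.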

You are probably wondering what the difference is between RDM-P and RDM-V, and what in-guest storage is. First let us look at RDMs, and then at in-guest storage. In Part III of this series I will discuss use cases for the different storage presentation types.

RDM-P and RDM-V

You can configure RDMs in virtual compatibility mode (or RDM-V), or physical compatibility mode (or RDM-P).
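On an ESXi host, the compatibility mode is chosen when the RDM mapping file is created. The `vmkfstools` utility uses `-r` for a virtual compatibility RDM and `-z` for a physical (pass-through) RDM. The helper below only builds that command line for illustration and does not run it; the `rdm_command` function, the device ID, and the datastore path are hypothetical placeholders.

```python
import shlex
from typing import List

# vmkfstools flags: -r creates a virtual compatibility RDM, -z a physical (pass-through) RDM.
RDM_FLAGS = {"RDM-V": "-r", "RDM-P": "-z"}


def rdm_command(mode: str, device_id: str, mapping_file: str) -> List[str]:
    """Build, but do not run, the vmkfstools command that creates an RDM mapping file."""
    if mode not in RDM_FLAGS:
        raise ValueError(f"unknown RDM mode {mode!r}; expected 'RDM-V' or 'RDM-P'")
    return ["vmkfstools", RDM_FLAGS[mode], f"/vmfs/devices/disks/{device_id}", mapping_file]


if __name__ == "__main__":
    # Hypothetical device ID and datastore path; substitute your own.
    cmd = rdm_command("RDM-P", "naa.6000000000000000000000000000abcd",
                      "/vmfs/volumes/datastore1/db01/db01_data_rdmp.vmdk")
    print(shlex.join(cmd))
```

Many shops make the same choice through the compatibility-mode setting in the vSphere Client when adding an RDM disk to a VM; either way, the mode is part of how the mapping file is created.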

RDM-V, or virtual compatibility mode, specifies full virtualization of the mapped device. In RDM-V mode the hypervisor is responsible for SCSI commands, and with the hypervisor handling them, a larger set of virtualization tooling is available.

In RDM-P, or physical compatibility mode, SCSI commands pass through the hypervisor directly and unaltered. This allows SAN management tools to operate on the LUN. The cost of RDM-P is the loss of vSphere snapshot-based tooling.

Note: The exception is the REPORT LUNS command, which the hypervisor virtualizes so that it can isolate the LUN to the owning virtual machine. For more information, see the online vSphere Storage documentation under the topic RDM Virtual and Physical Compatibility Modes.
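The difference between the two modes comes down to which SCSI commands reach the array. The vSphere Storage documentation describes virtual mode as sending only READ and WRITE to the mapped device, while physical mode passes everything through except REPORT LUNS, which is virtualized. The toy model below illustrates that split using standard SCSI opcodes. It is a simplification for explanation, not how the VMkernel is implemented, and the `handled_by` function is hypothetical.

```python
from enum import Enum

# Standard SCSI operation codes; only a handful are modeled here.
INQUIRY = 0x12
READ_10, WRITE_10 = 0x28, 0x2A
READ_16, WRITE_16 = 0x88, 0x8A
PERSISTENT_RESERVE_OUT = 0x5F
REPORT_LUNS = 0xA0
READ_WRITE_OPCODES = {READ_10, WRITE_10, READ_16, WRITE_16}


class RdmMode(Enum):
    VIRTUAL = "RDM-V"
    PHYSICAL = "RDM-P"


def handled_by(opcode: int, mode: RdmMode) -> str:
    """Return 'device' if the command reaches the LUN, 'hypervisor' if it is virtualized."""
    if mode is RdmMode.PHYSICAL:
        # Pass-through, except REPORT LUNS, which is virtualized to isolate the LUN to its VM.
        return "hypervisor" if opcode == REPORT_LUNS else "device"
    # Virtual mode: only reads and writes are sent to the mapped device.
    return "device" if opcode in READ_WRITE_OPCODES else "hypervisor"
```

In this simplified model, a reservation command such as PERSISTENT RESERVE OUT reaches the array only in physical mode, which is one way to see why reservation-based clustering is commonly paired with RDM-P.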

A common use of RDM-Physical is for technologies that rely on SCSI reservations, such as Microsoft Clustering. A SCSI reservation is a way for a host to reserve exclusive access to a LUN in a shared storage configuration.
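Conceptually, a SCSI reservation behaves like an exclusive lock that one host holds on a shared LUN. The in-memory sketch below models only that idea; the `SharedLun` class and its methods are hypothetical, and it omits persistent reservation keys, reads, and reset behavior that real cluster software depends on.

```python
from typing import Dict, Optional


class ReservationConflict(Exception):
    """Raised where a real target would return RESERVATION CONFLICT status."""


class SharedLun:
    """Toy model of a shared LUN that one host at a time may reserve (hypothetical API)."""

    def __init__(self) -> None:
        self.holder: Optional[str] = None
        self.blocks: Dict[int, bytes] = {}

    def _check(self, host: str) -> None:
        if self.holder is not None and self.holder != host:
            raise ReservationConflict(f"{host}: LUN is reserved by {self.holder}")

    def reserve(self, host: str) -> None:
        self._check(host)
        self.holder = host

    def release(self, host: str) -> None:
        # In this model, a release from a host that is not the holder is ignored.
        if self.holder == host:
            self.holder = None

    def write(self, host: str, lba: int, data: bytes) -> None:
        self._check(host)
        self.blocks[lba] = data


if __name__ == "__main__":
    lun = SharedLun()
    lun.reserve("node-a")                 # node-a becomes the active cluster node
    lun.write("node-a", 0, b"quorum")
    try:
        lun.write("node-b", 0, b"stale")  # the other node is fenced off
    except ReservationConflict as err:
        print("blocked:", err)
```

For this protection to work across ESXi hosts, the reservation has to be enforced by the array itself, which is why reservation-based clustering favors a presentation that passes such commands through to the LUN.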

Oracle Cluster Services does not use SCSI reservations. Instead, Oracle relies on its own software mechanisms to protect the integrity of the shared storage in a RAC configuration. For shops that choose not to follow the VMDK recommendation, HoB recommends RDM-Virtual rather than RDM-Physical for Oracle RAC configurations.

RDM-Physical allows SAN tooling access to the storage. For this reason, shops that heavily leverage such tooling might be tempted to configure RDM-P.

Finally, I leave you with a comparison of RDM-P vs. RDM-V. In the next post I will cover in-guest storage and begin to discuss some storage presentation use cases.